Mediators

2025-02-25

Participatory Collective Intelligence for Multi-Stakeholder Urban Decision-Making

Introduction

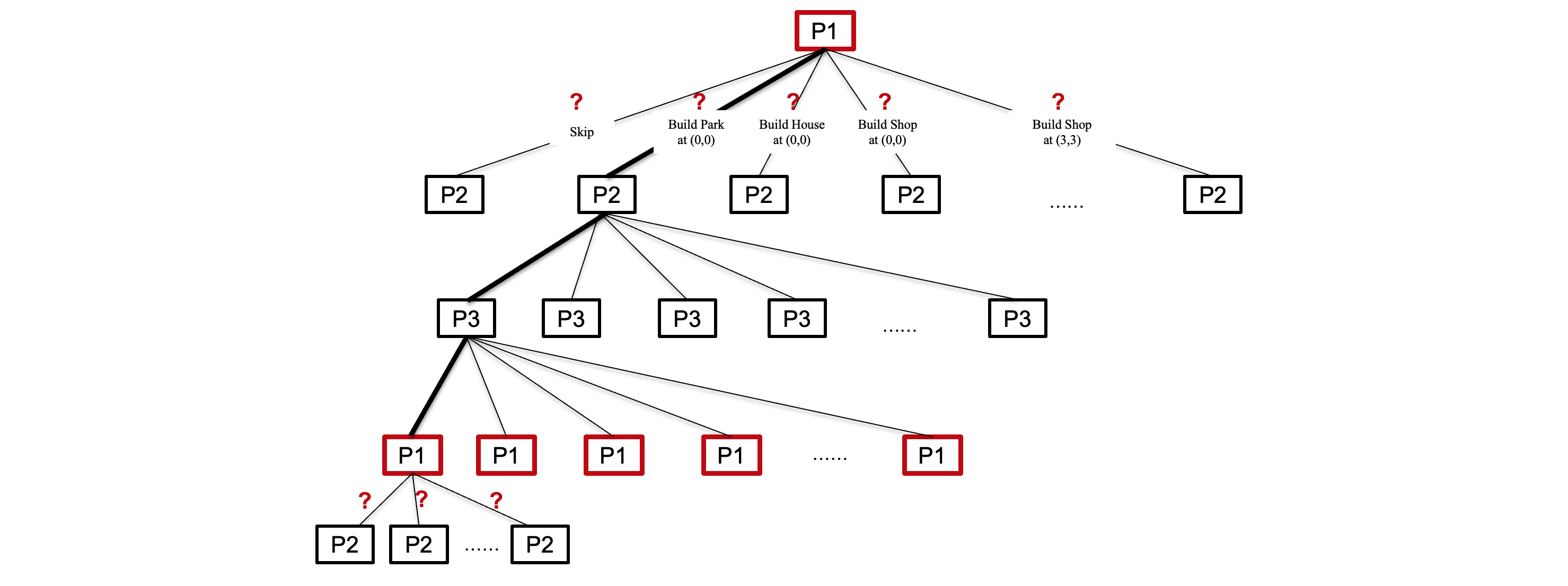

Decision-making often requires agreement among multiple stakeholders. The hardest step is reaching consensus, which is driven by negotiation and the exchange of interests between stakeholders. The urban environment is an inherently complex, multi-dimensional design challenge. Unlike engineering problems with well-defined constraints and objectives, urban issues are often fraught with ambiguity, conflicting interests, and deep-rooted value tensions among diverse stakeholders, which makes agreement difficult to achieve. In essence, design is a practice of reaching consensus. This thesis focuses on how collaboration between humans and AI can help stakeholders build shared value through discussion, negotiation, and co-learning. It explores a new interface that enables participatory, interpretable, and consensus-oriented decision-making by facilitating effective collaboration between human stakeholders and AI agents.

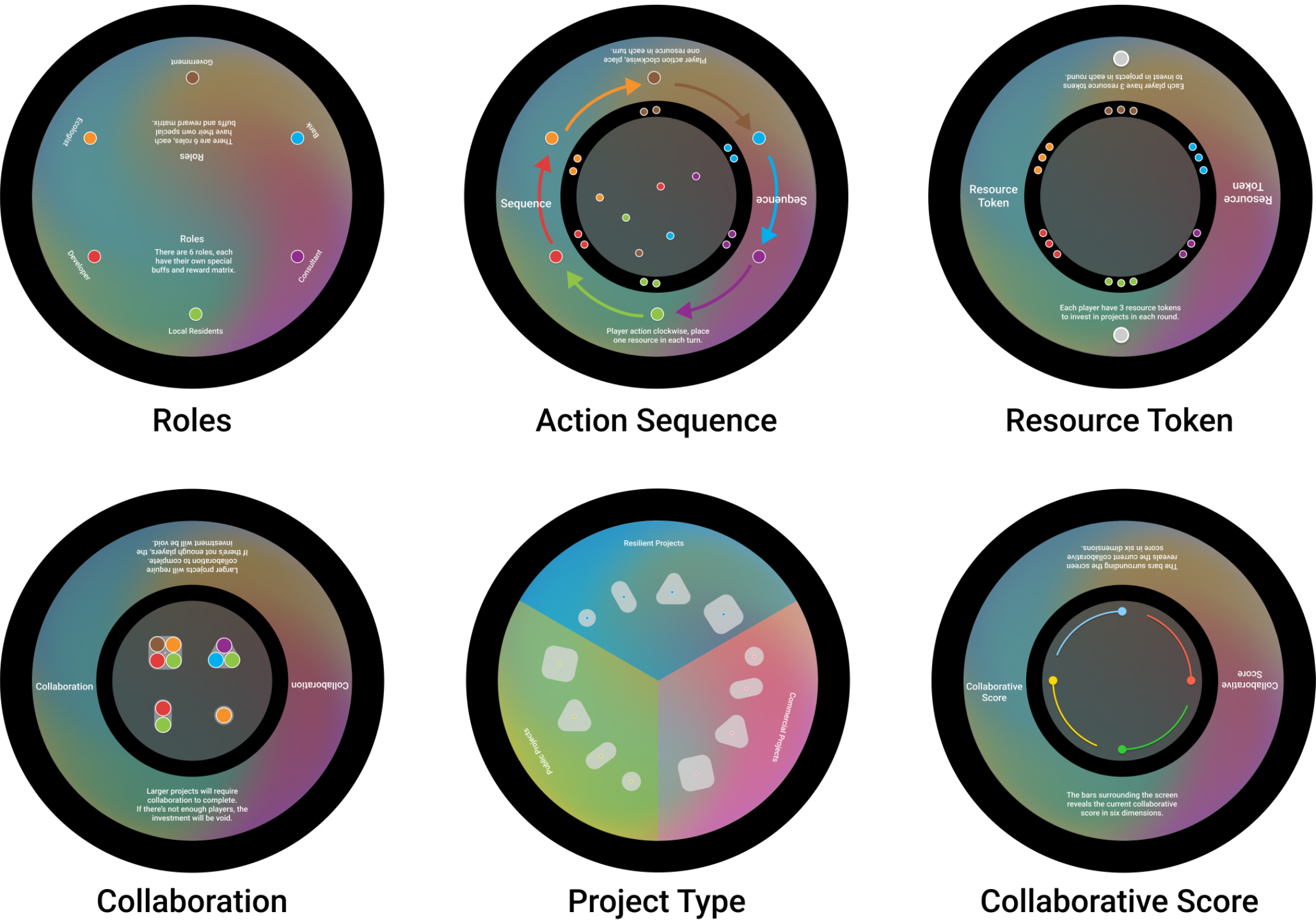

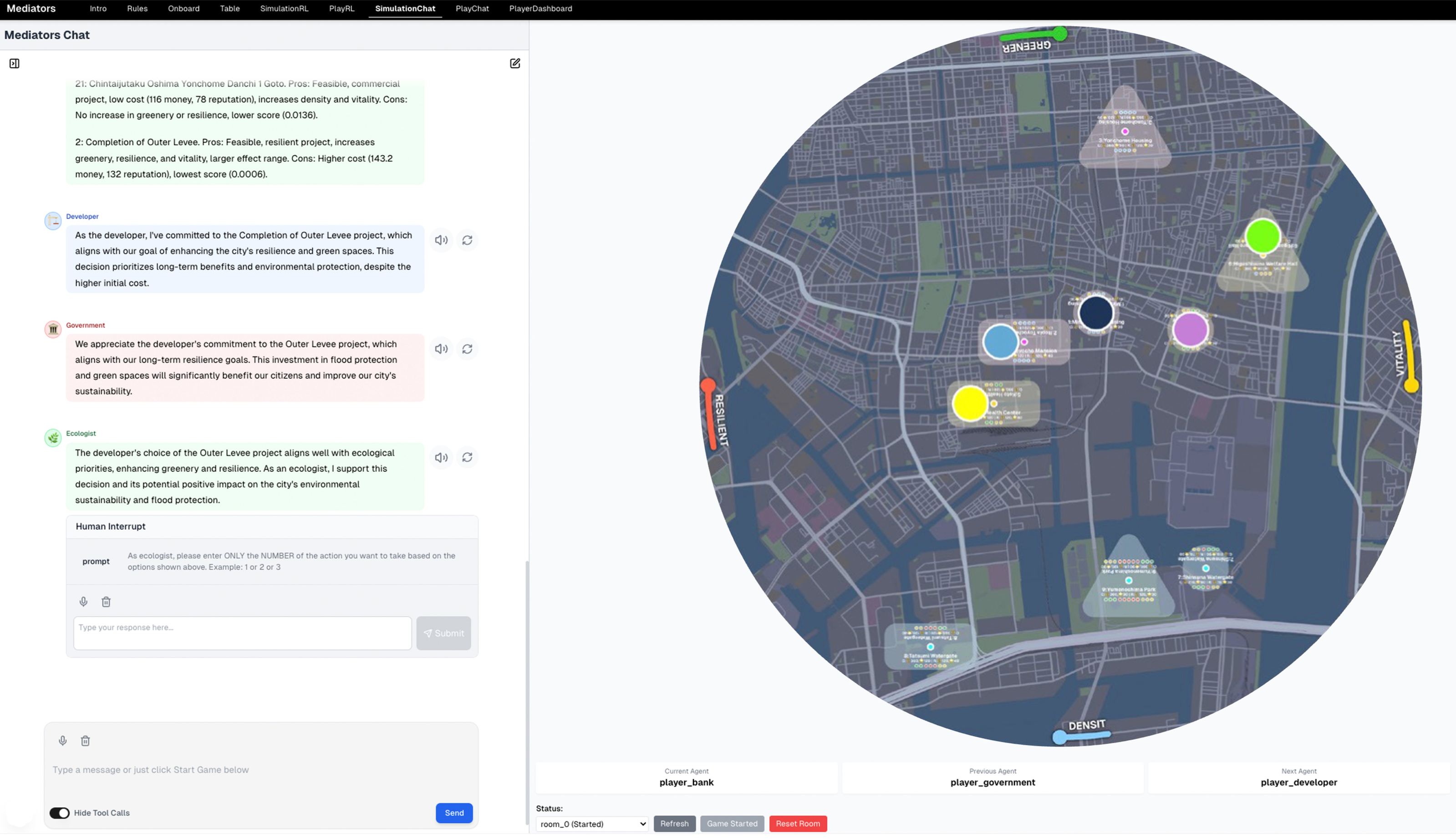

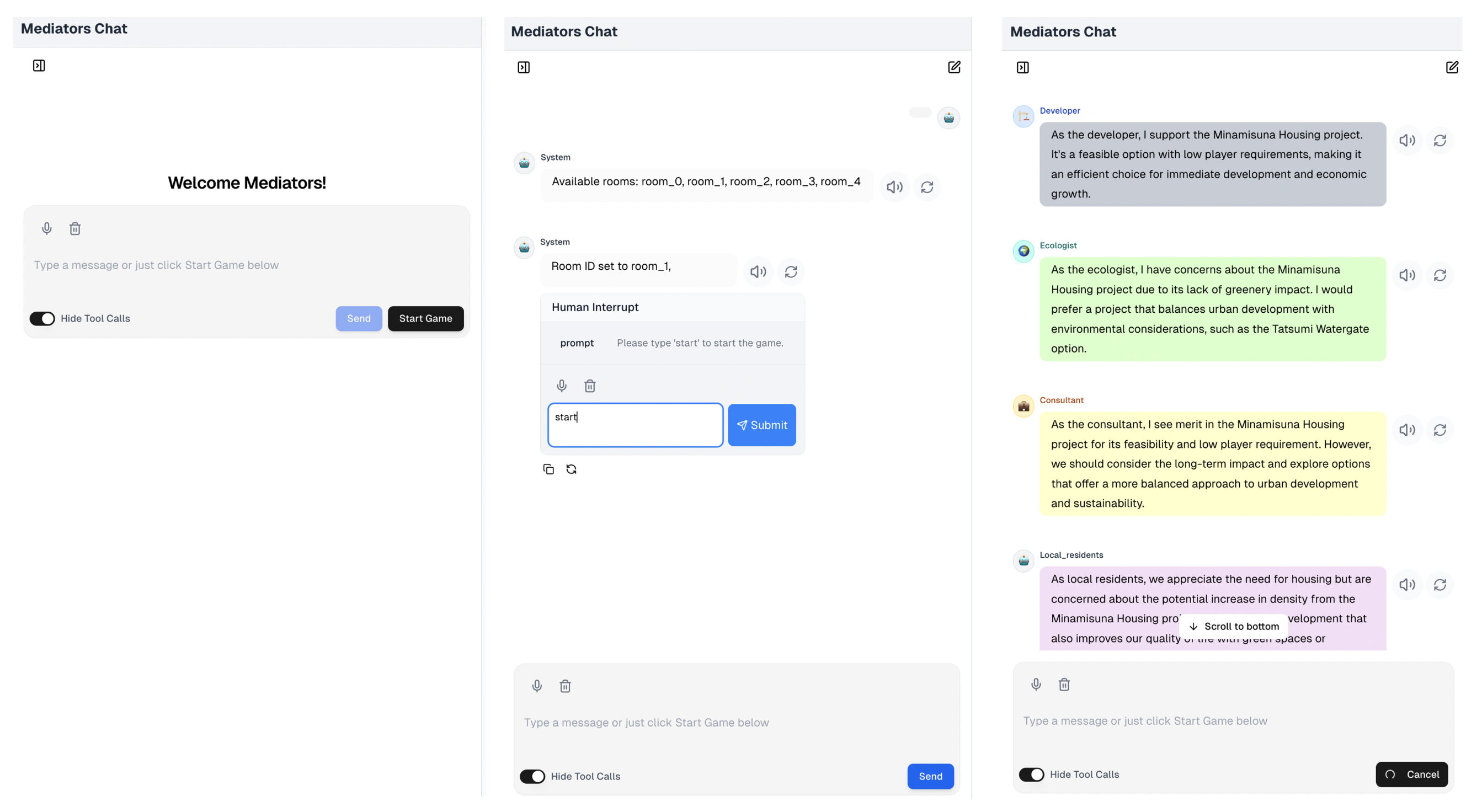

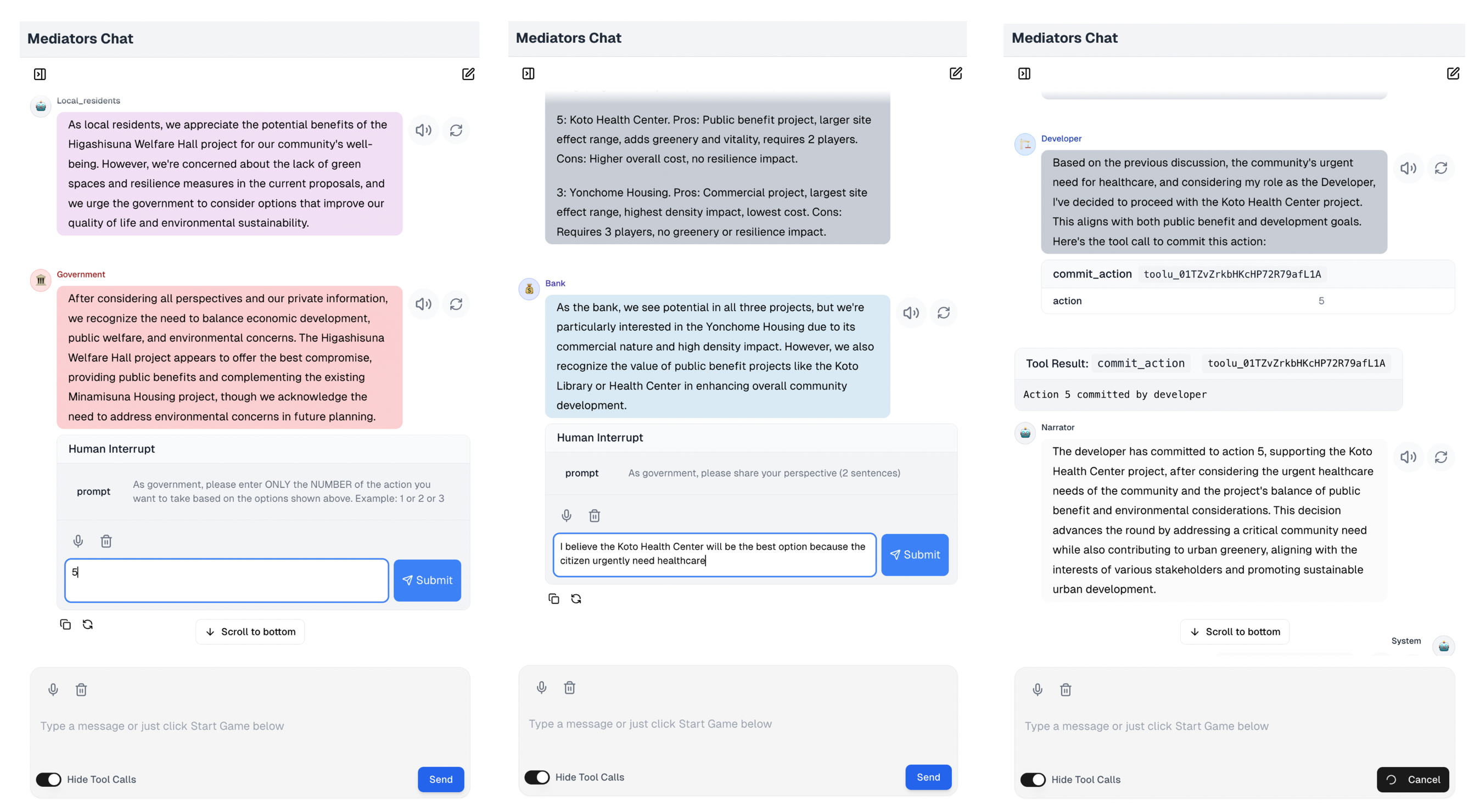

Interactive Multi-Agent Strategic Game

Beyond the game-board interactions, the core gameplay follows a repeating sequence of views that supplies contextual information and improves playability. At the beginning of each round, a short introduction informs players of the current urbanization context and priorities. Players then take actions in turn, following the main game rules. At the midpoint of a round, a flood event may be triggered, activating a dedicated view that visualizes the spatial distribution of losses; players use this information to refine their decisions in later turns. The round-conclusion view is displayed once all players have exhausted their resource tokens and summarizes the impact of each player's actions on the game map.
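The round structure can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not the game's actual implementation: the `Player` class, the `"build"` action label, the flood probability, and defining the "midpoint" as half of all resource tokens spent are all hypothetical choices made for the example.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Player:
    name: str
    tokens: int  # resource tokens available this round
    actions: list = field(default_factory=list)


def play_round(players, round_no, flood_prob=0.5, rng=None):
    """One round: introduction, turns in order, optional mid-round flood, conclusion."""
    rng = rng or random.Random()
    log = [f"intro: round {round_no} context and priorities"]
    midpoint = sum(p.tokens for p in players) // 2  # assumption: half of all tokens spent
    spent = 0
    flood_checked = False
    # Players act in a fixed order until every resource token is exhausted.
    while any(p.tokens > 0 for p in players):
        for p in players:
            if p.tokens == 0:
                continue
            p.tokens -= 1
            p.actions.append(f"build@r{round_no}")  # hypothetical action label
            spent += 1
            log.append(f"turn: {p.name}")
            # A flood may be triggered once, when the round reaches its midpoint.
            if not flood_checked and spent >= midpoint:
                flood_checked = True
                if rng.random() < flood_prob:
                    log.append("flood: show spatial distribution of losses")
    # Conclusion view: summary of each player's actions this round.
    log.append("conclusion: " + ", ".join(f"{p.name}={len(p.actions)}" for p in players))
    return log
```

Keeping the flood check tied to tokens spent, rather than to a fixed turn index, means the midpoint still makes sense when players hold different numbers of tokens.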

Game as a Collaborative Decision-Making Tool

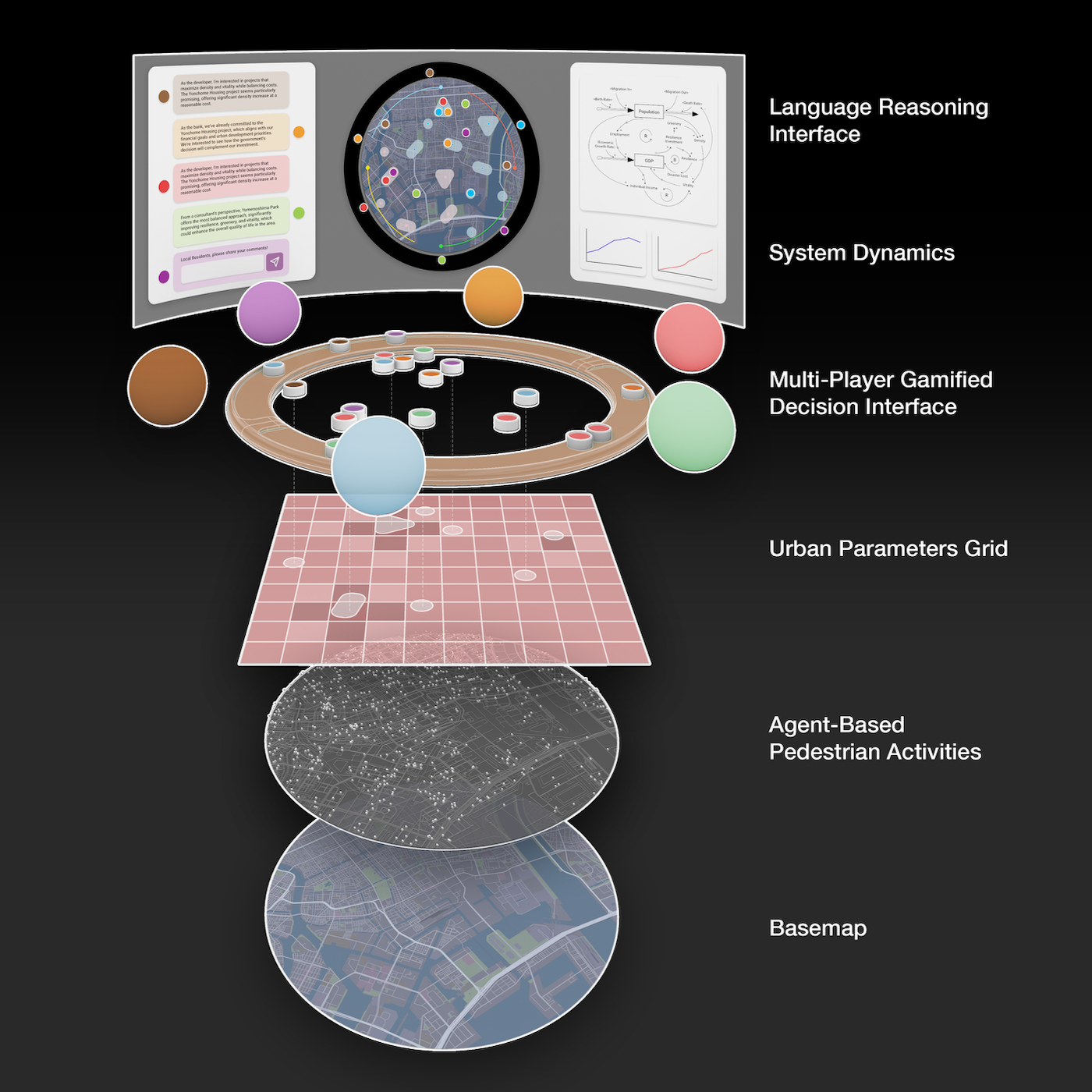

The system is built from layers of urban characteristics that cover the fundamental elements of the urban environment. Ranging from the macroscopic to the microscopic, these layers form a multi-dimensional simulation environment that players interact with through a game interface. As in the real world, agents (decision-makers) have a limited ability to change the environment: they act mainly on the urban grid through building projects. Meanwhile, the system dynamics and the agent-based pedestrian simulation serve as auxiliary mechanisms that reflect these changes but do not directly affect the players' rewards.
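The separation between the layer that players change and the auxiliary layers that only react can be sketched as below. The layer names, the integer land-use codes, and the reward rule are hypothetical placeholders chosen for illustration; the point is only that the auxiliary update reads the grid without feeding back into the reward.

```python
from dataclasses import dataclass, field


@dataclass
class UrbanGrid:
    width: int
    height: int
    # Hypothetical layers, from macroscopic to microscopic; 0 means "empty".
    layers: dict = field(default_factory=dict)

    def __post_init__(self):
        for name in ("land_use", "elevation", "population", "pedestrian_flow"):
            self.layers.setdefault(name, [[0] * self.width for _ in range(self.height)])

    def build(self, x, y, land_use):
        """The players' main lever: a building project on one grid cell."""
        self.layers["land_use"][y][x] = land_use


def player_reward(grid, owned_cells):
    # Assumed rule: reward depends only on the land-use layer of the player's cells.
    return sum(1 for (x, y) in owned_cells if grid.layers["land_use"][y][x] != 0)


def update_auxiliary(grid):
    # Auxiliary dynamics: pedestrian flow responds to land use but feeds no reward.
    for y in range(grid.height):
        for x in range(grid.width):
            built = grid.layers["land_use"][y][x] != 0
            grid.layers["pedestrian_flow"][y][x] = 2 if built else 0
```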

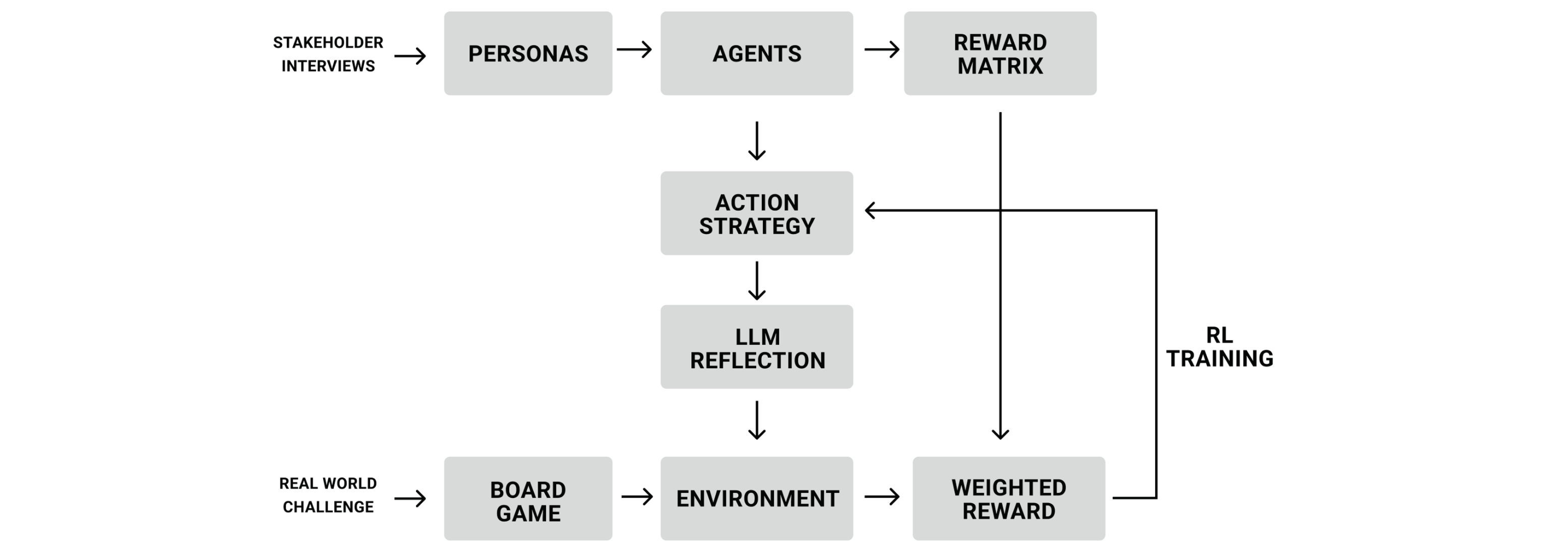

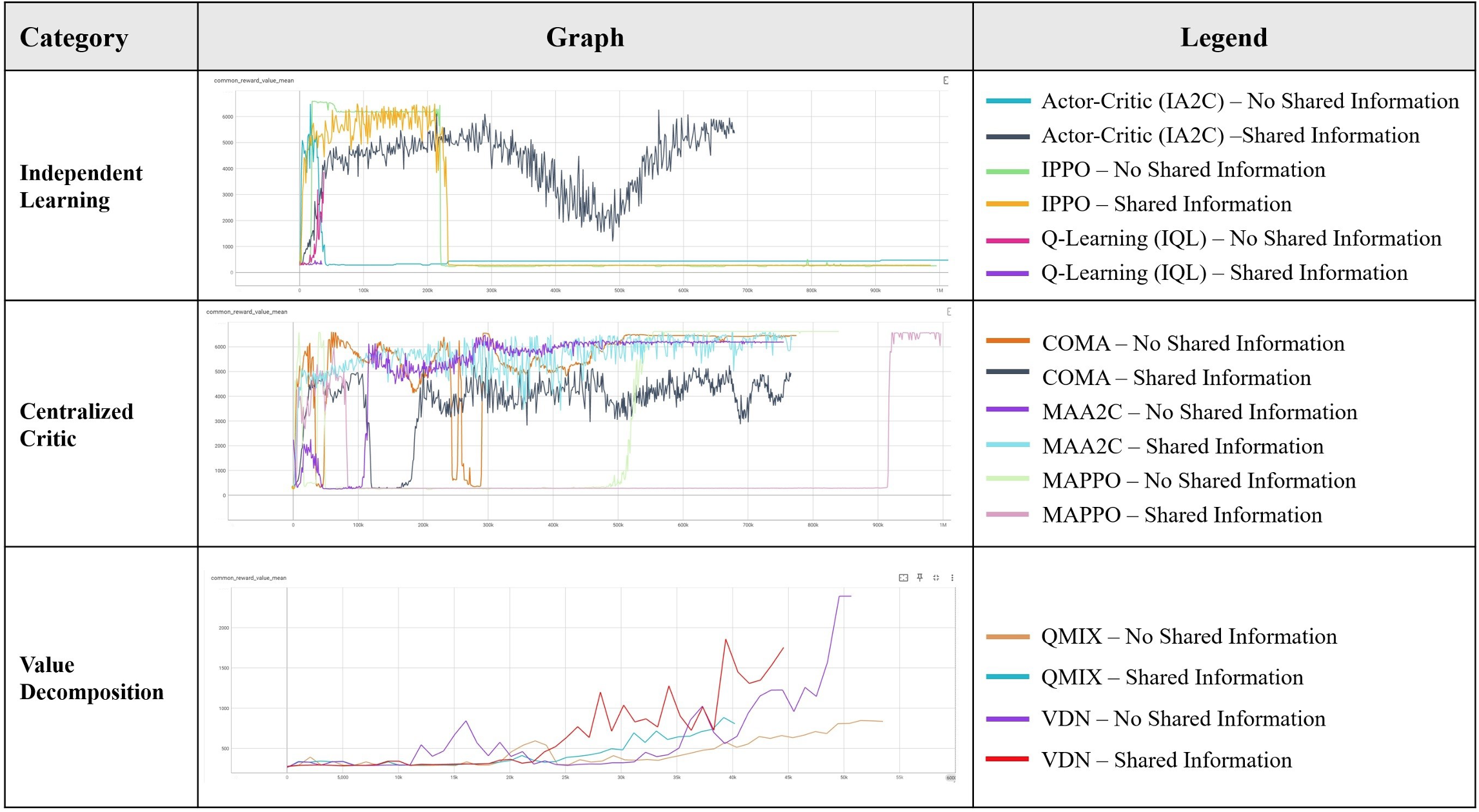

Reinforcement Learning as Strategic Baseline

We model each player's decision process as a tree-form decision process (TFDP) (Farina, 2024). A TFDP structures decision-making as a tree in which each node corresponds to a game state and each branch represents a possible action. A strategy is defined as a probability distribution over the branches at each decision point; decision points are marked with question marks in the illustration.
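To make the definition concrete, the sketch below evaluates a behavioral strategy (one probability distribution per decision point) on a tiny TFDP. It follows the usual TFDP split into decision points, where the player chooses, and observation points, where chance or other players determine the outcome. The tree, action names, probabilities, and utilities are invented for illustration and are not taken from the game.

```python
from dataclasses import dataclass
from typing import Dict, Tuple, Union


@dataclass
class Leaf:
    utility: float


@dataclass
class Decision:
    name: str                       # a decision point (a "?" in the illustration)
    children: Dict[str, "Node"]     # action -> next node


@dataclass
class Observation:
    children: Dict[str, Tuple[float, "Node"]]  # outcome -> (probability, next node)


Node = Union[Leaf, Decision, Observation]


def expected_utility(node, strategy):
    """Expected utility of a behavioral strategy: strategy[name][action] -> probability."""
    if isinstance(node, Leaf):
        return node.utility
    if isinstance(node, Decision):
        dist = strategy[node.name]
        return sum(dist[a] * expected_utility(child, strategy)
                   for a, child in node.children.items())
    return sum(p * expected_utility(child, strategy)
               for p, child in node.children.values())


# Hypothetical example: invest in a levee or in housing, then a flood may occur.
tree = Decision("d1", {
    "levee": Observation({"flood": (0.3, Leaf(2.0)), "dry": (0.7, Leaf(1.0))}),
    "housing": Observation({
        "flood": (0.3, Decision("d2", {"repair": Leaf(-1.0), "relocate": Leaf(0.0)})),
        "dry": (0.7, Leaf(3.0)),
    }),
})
```

With `strategy = {"d1": {"levee": 0.5, "housing": 0.5}, "d2": {"repair": 0.5, "relocate": 0.5}}`, the expected utility works out to 0.5 · 1.3 + 0.5 · 1.95 = 1.625.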

To translate the game mechanics into an environment suitable for training and evaluating AI agents, we implement the game in Python as a PettingZoo environment. Training is conducted with the ePyMARL framework (Papoudakis et al., 2021). We selected MAPPO and MAA2C, algorithms demonstrated to be effective within that framework, to train our agents. In our MARL-driven simulations, we observed that agents are more likely to choose cooperative actions when making autonomous decisions. This behavior aligns with expectations, since the reward structure incentivizes cooperative actions. Below is a typical simulation round.
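The sketch below shows the general shape of such an environment. To stay dependency-free it does not import PettingZoo; it only mirrors the Parallel API convention (`reset` returning observations and infos, `step` returning observations, rewards, terminations, truncations, and infos as per-agent dicts). The action set, observation tuple, and reward rule are hypothetical. The reward illustrates one way cooperation can be incentivized: because `coop_bonus / n_agents > 1`, contributing to the shared levee pays each agent more than a private build.

```python
class FloodGameParallelEnv:
    """Hypothetical sketch shaped like PettingZoo's Parallel API (not the real game)."""

    metadata = {"name": "flood_game_sketch_v0"}
    ACTIONS = ("private_build", "cooperative_levee", "pass")  # hypothetical actions

    def __init__(self, n_agents=3, max_steps=5, coop_bonus=4.0):
        self.possible_agents = [f"player_{i}" for i in range(n_agents)]
        self.max_steps = max_steps
        self.coop_bonus = coop_bonus  # shared bonus per cooperative action
        self.agents = []

    def _obs(self):
        # Every agent observes the step counter and the accumulated flood protection.
        return {a: (self.t, self.protection) for a in self.agents}

    def reset(self, seed=None, options=None):
        # `seed` is accepted for API compatibility; this sketch is deterministic.
        self.agents = list(self.possible_agents)
        self.t = 0
        self.protection = 0
        return self._obs(), {a: {} for a in self.agents}

    def step(self, actions):
        self.t += 1
        coop = sum(1 for a in self.agents if self.ACTIONS[actions[a]] == "cooperative_levee")
        self.protection += coop
        shared = self.coop_bonus * coop / len(self.agents)
        rewards = {}
        for a in self.agents:
            private = 1.0 if self.ACTIONS[actions[a]] == "private_build" else 0.0
            rewards[a] = private + shared
        truncated = self.t >= self.max_steps
        obs = self._obs()
        terminations = {a: False for a in self.agents}
        truncations = {a: truncated for a in self.agents}
        infos = {a: {} for a in self.agents}
        if truncated:
            self.agents = []  # PettingZoo convention: no live agents once the episode ends
        return obs, rewards, terminations, truncations, infos
```

A rollout follows the usual Parallel API loop: call `reset()`, then `step(...)` with one action per live agent while `env.agents` is non-empty.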

Gameplay Driven by Human-Agent Negotiation

We extend ReAct agents to interleave reasoning and tool calls within the chain of thought, enabling them to interact with the game server and to use the trained MARL model as a quantitative reasoning engine. We integrate human feedback into the generative-agent discussion loop at two critical decision points: discussion and action commitment. During each agent's turn, all other agents are prompted in sequence to give feedback. When the role being prompted is assigned to a human, the loop pauses and waits for that person to enter feedback in plain text. The human feedback becomes part of the message history and can influence the downstream reasoning and decisions of the other agents.
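The feedback step of this loop can be sketched as follows. This is a simplified illustration: AI agents are stand-in callables over the message history (in the actual system they would be LLM-backed ReAct agents), and the `Participant` class, `phase` labels, and prompt text are hypothetical. The human branch blocks on `input()` by default, and the function is injectable so the loop can be tested.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

History = List[Dict[str, str]]


@dataclass
class Participant:
    name: str
    is_human: bool
    # For AI agents: a stand-in for an LLM call, mapping history -> feedback text.
    respond: Optional[Callable[[History], str]] = None


def feedback_round(current, participants, history, phase="discussion", read_human=input):
    """During `current`'s turn, prompt every other participant in order for feedback."""
    for p in participants:
        if p.name == current.name:
            continue
        if p.is_human:
            # The loop pauses here until the human types plain-text feedback.
            text = read_human(f"[{phase}] {p.name}, feedback on {current.name}'s proposal: ")
        else:
            text = p.respond(history)
        # Feedback joins the shared history, so later participants condition on it.
        history.append({"role": p.name, "content": text})
    return history
```

Calling it once with `phase="discussion"` and again with `phase="commitment"` corresponds to the two points at which human feedback enters the loop.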

Tokens and Hardware

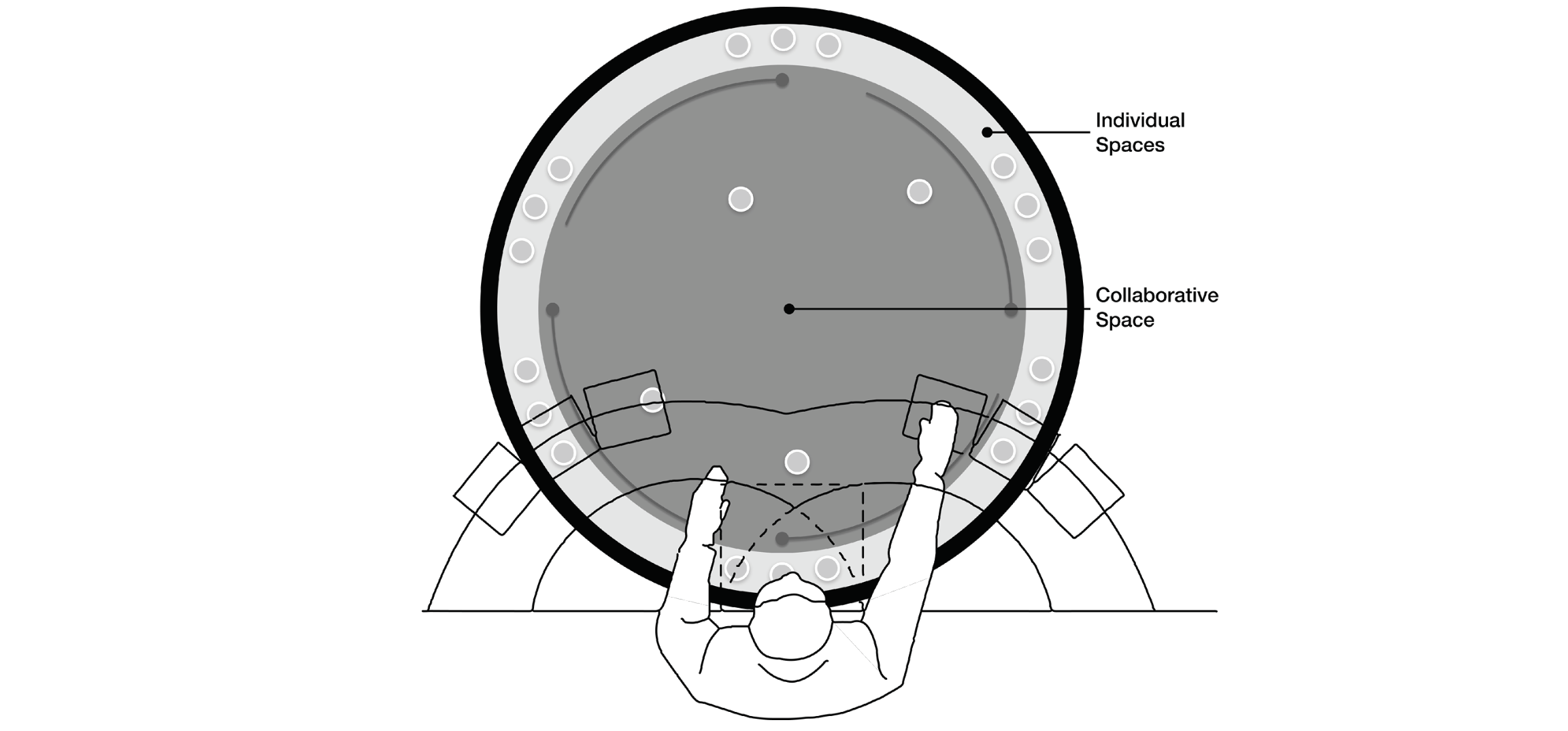

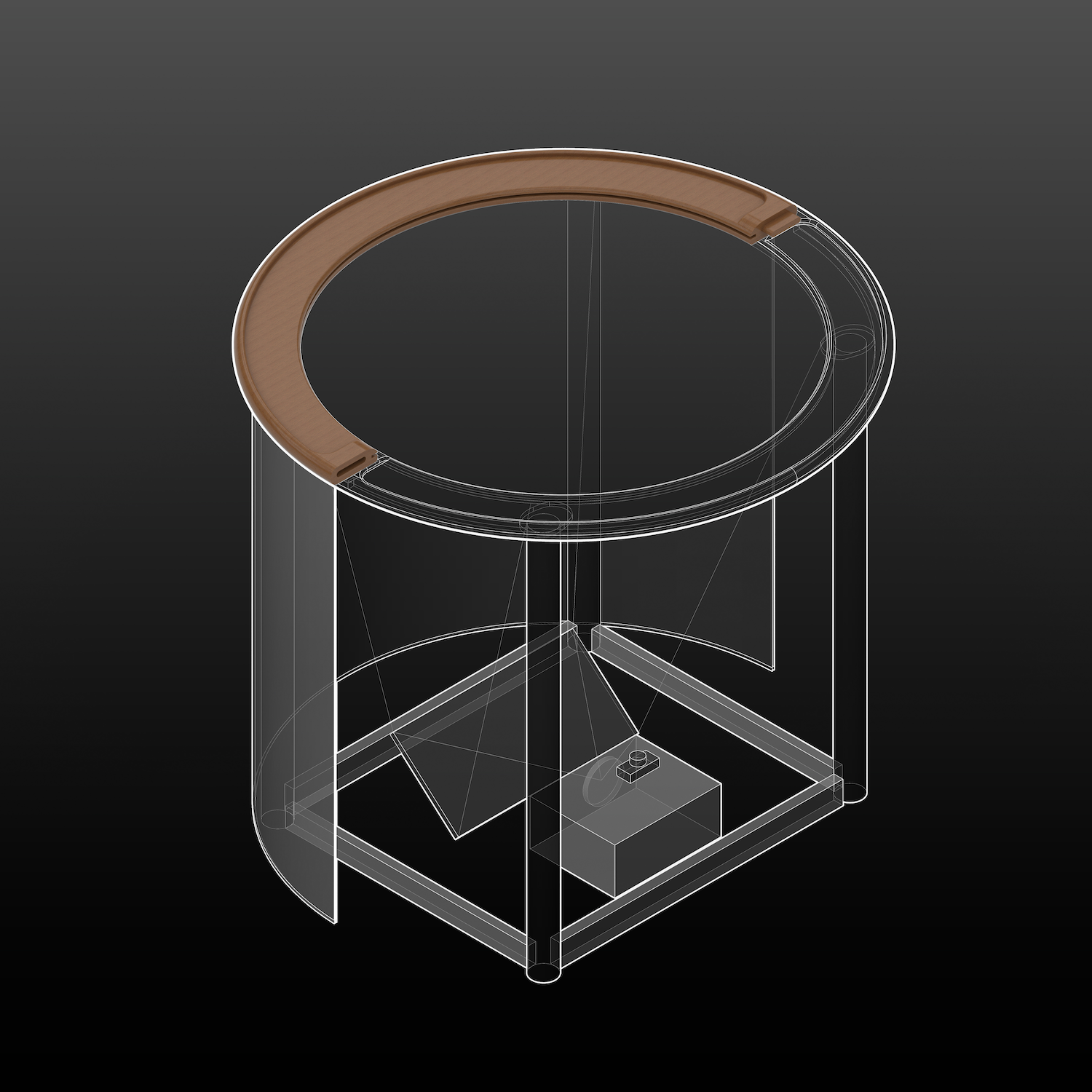

The centerpiece of our physical interface is a custom-designed table that serves as an interactive display platform for multiple players. The table itself is engineered as an integral component of the game board.

We designed and built tangible tokens to represent players' actions. Each token is read by a wide-angle camera beneath the transparent rear-projection screen; the system uses OpenCV's ArUco module to detect the ArUco markers attached to the bottom of each token.
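A minimal sketch of the token-reading step is shown below, assuming OpenCV 4.7 or later (where `cv2.aruco.ArucoDetector` is available). The `DICT_4X4_50` marker family, the grid size, and the left-right mirroring of the under-table camera image are all assumptions that depend on the actual markers and mounting. OpenCV is imported inside `detect_tokens` so that the pure mapping helper can run on its own.

```python
def detect_tokens(frame_bgr):
    """Detect ArUco markers in one camera frame (assumes OpenCV >= 4.7)."""
    import cv2  # imported lazily so markers_to_cells works without OpenCV installed
    gray = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2GRAY)
    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_4X4_50)  # assumed family
    detector = cv2.aruco.ArucoDetector(dictionary, cv2.aruco.DetectorParameters())
    corners, ids, _rejected = detector.detectMarkers(gray)
    return corners, ids


def markers_to_cells(corners, ids, frame_w, frame_h, cols, rows, mirror_x=True):
    """Map each detected marker's centroid to a (col, row) cell of the game grid."""
    cells = {}
    if ids is None:  # OpenCV returns None when no marker is found
        return cells
    for quad, marker_id in zip(corners, ids):
        pts = quad[0]  # the four (x, y) corner points of one marker
        cx = sum(float(p[0]) for p in pts) / 4.0
        cy = sum(float(p[1]) for p in pts) / 4.0
        if mirror_x:
            # Assumption: seen from below, the camera image is left-right mirrored.
            cx = frame_w - cx
        col = max(0, min(int(cx / frame_w * cols), cols - 1))
        row = max(0, min(int(cy / frame_h * rows), rows - 1))
        cells[int(marker_id[0])] = (col, row)
    return cells
```

In a capture loop, each frame would pass through `detect_tokens` and then `markers_to_cells`, giving a marker-ID-to-cell mapping that the game server can treat as the players' token placements.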