Agent-Augmented 3D Modeling

2025-02-28

From Idea to Co-Creation: A Planner–Actor–Critic Framework for Agent-Augmented 3D Modeling

Abstract

Recent advancements in large language models (LLMs) and AI agents have enabled them to generate diverse content, including text and images, through chat-based interfaces. However, producing structured, engineered outputs—such as functional 3D models or CAD designs—remains a significant challenge. Unlike generative AI, which produces outputs directly, engineering workflows require humans to follow an iterative process: generating ideas, creating prototypes, evaluating outcomes, and refining designs. To bridge this gap, we propose a Planner–Actor–Critic (PAC) AI agent framework that emulates human creative workflows. The Planner agent generates multi-step strategies through extended reasoning (chain-of-thought), identifying which specific modeling functions to invoke and their execution sequence. The Actor agent translates these plans into executable actions within Rhino 3D through Model Context Protocol (MCP) servers, operating tools with structured schemas and precise function signatures. The Critic agent evaluates interim results, ensures logical consistency, and provides feedback to refine subsequent actions, creating a self-correcting loop. By decomposing complex tasks into manageable subtasks, our framework demonstrates how agentic AI can augment human creativity in engineering design processes. This research illustrates a path toward enabling AI agents to generate structured, functional outputs that meet engineering requirements, representing a shift from content generation to engineering co-creation.

Keywords: Large Language Models, AI Agents, 3D Modeling, Model Context Protocol, Engineering Design

1. Introduction

Recent advances in large language models (LLMs) have demonstrated impressive capabilities across various domains, from natural language processing to creative content generation. However, a significant gap exists between AI's ability to generate unstructured content (text, images) and its capacity to produce structured, engineered outputs such as functional 3D models or CAD designs. While generative AI excels at direct output production, professional engineering workflows require an iterative process involving ideation, prototyping, evaluation, and refinement—steps that demand both creativity and technical precision.

Traditional AI-assisted 3D modeling approaches have primarily focused on either direct geometry generation through neural networks or simple parametric automation. These methods fall short in capturing the nuanced, multi-step reasoning that characterizes human design processes. Professional designers and engineers do not simply generate outputs; they plan strategies, execute specific operations, evaluate intermediate results, and adjust their approach based on feedback. This workflow is fundamentally different from current AI paradigms.

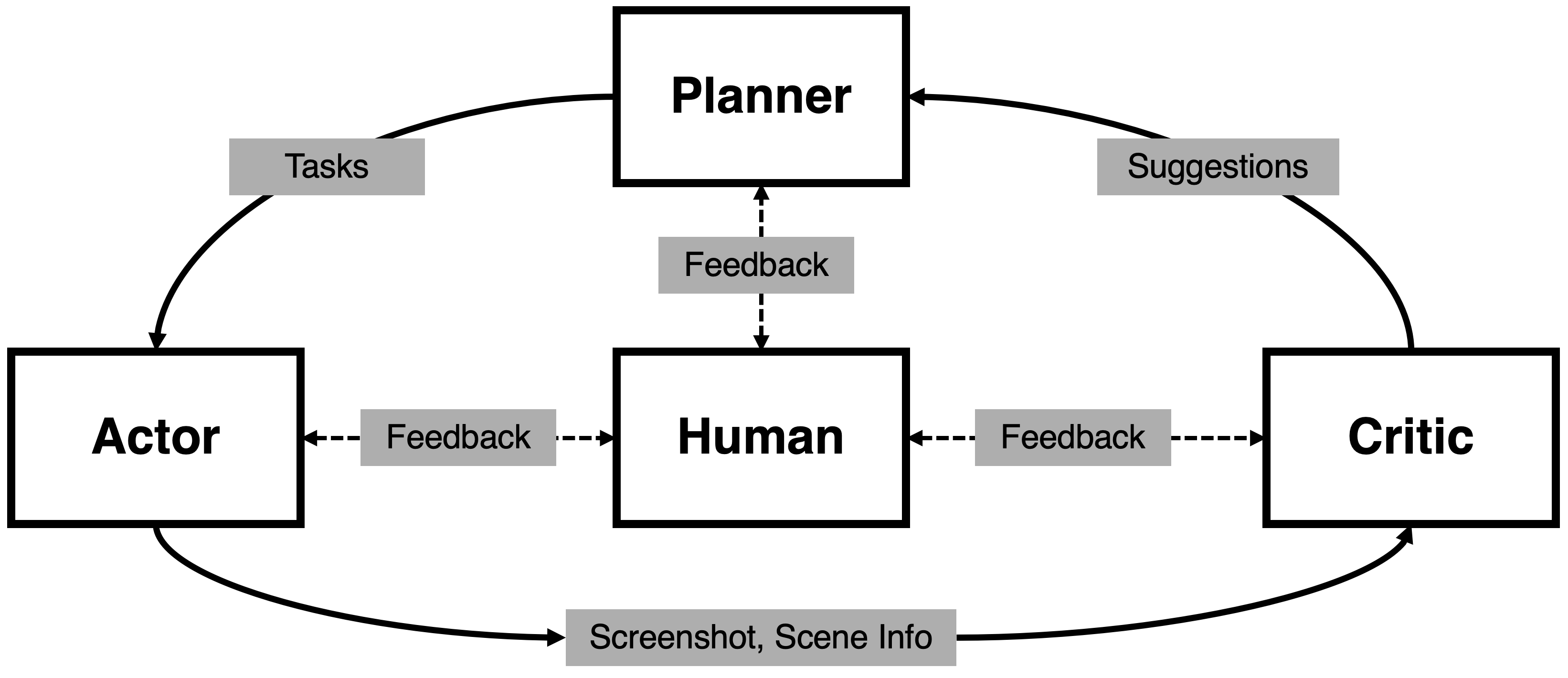

This paper introduces a Planner–Actor–Critic (PAC) framework that bridges this gap by enabling AI agents to participate in engineering design processes through structured reasoning and tool use. Our approach draws inspiration from reinforcement learning's actor-critic architecture while adapting it for creative engineering tasks where "rewards" are not numeric scores but qualitative assessments of design coherence, functionality, and aesthetic quality.

The framework operates through three specialized agents:

The Planner Agent generates high-level strategies through chain-of-thought reasoning, decomposing complex design objectives into sequences of specific modeling operations.

The Actor Agent translates these plans into executable actions within Rhino 3D, utilizing the Model Context Protocol (MCP) to operate modeling tools with precise function signatures and structured schemas.

The Critic Agent evaluates intermediate results, ensures logical consistency across operations, and provides feedback to guide subsequent actions, creating a self-correcting design loop.

By implementing this framework in Rhino 3D, a widely used professional modeling environment, we demonstrate how agentic AI can augment rather than replace human creativity in engineering design. Our system maintains human agency through interactive oversight while automating routine operations and suggesting design strategies, representing a shift from pure content generation to engineering co-creation.

This work contributes to several emerging research directions: agentic AI systems that can plan and execute multi-step tasks, human-AI collaboration in creative domains, and the application of LLMs to structured engineering problems. Through case studies and performance analysis, we illustrate both the potential and limitations of current AI agents in engineering workflows, providing insights for future developments in AI-augmented design tools.

2. Background

2.1 Evolution of AI in 3D Modeling

The intersection of artificial intelligence and 3D modeling has evolved through several distinct paradigms. Early approaches focused on procedural generation through rule-based systems, where algorithms executed predefined operations to create geometric forms. While efficient for repetitive patterns, these systems lacked adaptability and required extensive manual programming for each design variation.

The advent of deep learning introduced neural approaches to 3D content generation. Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) demonstrated capabilities in generating 3D shapes from latent representations, while more recent diffusion models have shown promise in producing higher-quality geometric outputs. However, these methods typically generate complete objects in a single pass, lacking the iterative refinement and explicit reasoning that characterizes professional design workflows.

Parametric design systems, exemplified by tools like Grasshopper for Rhino 3D, offered a different paradigm by expressing designs as networks of mathematical relationships. While powerful for exploration within predefined parameter spaces, these systems require users to explicitly define all relationships and constraints upfront, limiting their flexibility for exploratory design processes.

2.2 Large Language Models as Reasoning Engines

The emergence of large language models (LLMs) has fundamentally shifted the landscape of AI capabilities. Beyond language generation, models such as GPT-4 and Claude have demonstrated sophisticated reasoning abilities, including:

- Chain-of-thought reasoning: Breaking down complex problems into logical steps

- Tool use: Invoking external functions and APIs based on structured descriptions

- Context maintenance: Tracking state across multi-turn interactions

- Code generation: Producing executable programs from natural language specifications

These capabilities suggest that LLMs could serve not merely as content generators but as reasoning engines capable of planning and executing complex workflows. The key insight is that while neural networks excel at pattern recognition and generation, LLMs can potentially orchestrate structured processes through explicit reasoning expressed in language.

2.3 Agent Frameworks and Tool Use

Recent research has explored using LLMs as the core of autonomous agents capable of planning, tool use, and iterative refinement. ReAct (Reasoning + Acting) demonstrated how LLMs could interleave reasoning traces with action execution, creating a more interpretable and controllable agent behavior. LangChain and similar frameworks have provided infrastructure for building such agents, including memory systems, tool integration, and multi-agent orchestration.

The Model Context Protocol (MCP), developed by Anthropic, represents a significant advance in standardizing how AI models interact with external tools and data sources. MCP defines:

- Structured schemas for describing tool capabilities and parameters

- Function signatures that specify input/output types and constraints

- Resource protocols for accessing external data and services

- Bidirectional communication between models and tool environments

By providing a standardized interface, MCP enables AI agents to operate complex software systems without requiring custom integrations for each tool, making it particularly suitable for engineering applications where diverse, specialized tools must work together.
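To make the standardized interface concrete: MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool name and an arguments object. The sketch below builds such a request in Python. The tool name `create_circle` follows the example in Section 4.2; the helper `make_tool_call` and the request id are hypothetical and only illustrate the message shape.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tool invocation as a JSON-RPC 2.0 request string."""
    request = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical call: a circle of radius 10 centered at the origin.
message = make_tool_call(1, "create_circle", {"center": [0, 0, 0], "radius": 10})
print(message)
```

Because every tool shares this envelope, an agent only needs the tool's name and schema to call it; no tool-specific client code is required.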

2.4 Actor-Critic Architecture in Reinforcement Learning

The actor-critic framework originates from reinforcement learning, where an actor (policy network) selects actions while a critic (value network) evaluates their quality. This separation of concerns enables more stable learning: the actor explores action spaces while the critic provides guidance by estimating long-term rewards.

In traditional RL, both components are neural networks trained through interaction with an environment providing numeric rewards. However, engineering design presents a different challenge: "rewards" are not immediately available, and success criteria are often qualitative, context-dependent, and multi-faceted (aesthetics, functionality, manufacturability, etc.).

Our work adapts this architecture for creative engineering by:

- Replacing the actor network with an LLM that carries out actions through tool calls

- Substituting the critic network with an evaluating LLM that assesses design coherence

- Introducing a separate planner that strategizes before action execution

- Operating in a human-in-the-loop context where ultimate judgment remains with the designer

This adaptation maintains the core insight of actor-critic methods—separating action selection from evaluation—while making it applicable to open-ended creative tasks.

3. Framework Architecture

3.1 System Overview

The Planner–Actor–Critic (PAC) framework orchestrates three specialized AI agents to enable collaborative 3D modeling within Rhino. Each agent operates through a distinct LLM instance with specialized prompts and tool access, communicating through a central coordination layer that maintains design context and execution state.

The system architecture follows a deliberative execution model rather than reactive control. When a user specifies a design objective through natural language, the workflow proceeds through three phases:

- Planning Phase: The Planner agent analyzes the objective and generates a structured strategy

- Execution Phase: The Actor agent implements the strategy through tool invocations

- Evaluation Phase: The Critic agent assesses results and provides feedback for refinement

This separation of concerns enables each agent to specialize while maintaining coherent overall behavior through their interactions.

3.2 The Planner Agent

The Planner agent serves as the strategic reasoning component, responsible for decomposing high-level design objectives into executable action sequences. It operates through extended chain-of-thought reasoning, explicitly articulating the logical steps required to achieve a design goal.

Core Responsibilities:

- Analyzing design objectives to identify required geometric operations

- Determining logical ordering of operations (e.g., create base geometry before modifications)

- Identifying dependencies between operations (e.g., selecting objects before transforming them)

- Generating step-by-step plans with specific tool invocations

The Planner does not execute operations directly; instead, it produces structured plans specifying:

- Which modeling functions to invoke

- In what sequence to invoke them

- What parameters each function requires

- How outputs from one step inform inputs to subsequent steps

This explicit planning enables transparency in the agent's reasoning and allows human designers to review and modify strategies before execution.
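One way to represent such a plan is as a list of typed steps, each naming a tool, its literal parameters, and any parameters that come from earlier steps. The sketch below is an assumption about a possible representation, not the framework's actual data model; `PlanStep`, `inputs_from`, `validate_plan`, and the tool name `extrude_curve` are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    tool: str                                         # modeling function to invoke
    params: dict = field(default_factory=dict)        # literal parameter values
    inputs_from: dict = field(default_factory=dict)   # param name -> index of an earlier step


def validate_plan(steps: list[PlanStep]) -> list[str]:
    """Return ordering problems; an empty list means every reference points backward."""
    problems = []
    for i, step in enumerate(steps):
        for param, source in step.inputs_from.items():
            if not 0 <= source < i:
                problems.append(
                    f"step {i}: '{param}' refers to step {source}, which has not run yet"
                )
    return problems


plan = [
    PlanStep("create_circle", {"center": [0, 0, 0], "radius": 10}),
    PlanStep("extrude_curve", {"height": 100}, inputs_from={"curve": 0}),
]
print(validate_plan(plan))
```

Checking references before execution catches "modify before create" mistakes without touching the Rhino document, and the same structure is easy for a designer to read and edit.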

Example Planning Output:

Objective: Create a parametric tower structure

Plan:

1. Create base circle (radius: 10 units)

2. Extrude circle vertically (height: 100 units)

3. Create helical curve around cylinder (rotations: 5, pitch: 20)

4. Split cylinder surface with helical curve

5. Select alternating surface panels

6. Offset selected panels outward (distance: 2 units)

3.3 The Actor Agent

The Actor agent translates the Planner's strategic directions into executable operations within Rhino 3D. It operates through the Model Context Protocol, which provides structured access to Rhino's modeling functions through formally defined tools.

Core Responsibilities:

- Interpreting plan steps into specific function calls

- Managing parameter values and type conversions

- Tracking geometric object identifiers (GUIDs) across operations

- Handling execution errors and reporting failures

- Maintaining geometry state within the Rhino document

The Actor operates a suite of MCP tools that expose Rhino's modeling capabilities through a standardized interface. Each tool is defined with:

- Schema: Formal specification of parameters, types, and constraints

- Documentation: Natural language description of functionality

- Implementation: Connection to actual Rhino scripting functions

- Validation: Input checking before execution

Tool Categories:

- Primitive Creation: Generating basic geometric shapes (points, curves, surfaces, solids)

- Transformation: Moving, rotating, scaling, mirroring objects

- Boolean Operations: Union, difference, intersection of solid objects

- Surface Operations: Lofting, sweeping, extruding, revolving curves

- Curve Operations: Trimming, extending, joining, offsetting curves

- Selection: Querying and filtering objects by properties

- Analysis: Measuring distances, areas, volumes, curvature

The Actor maintains context awareness by tracking object GUIDs generated during execution, enabling subsequent operations to reference previously created geometry.
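A minimal sketch of this bookkeeping, assuming each plan step produces at most one object and later steps refer to earlier ones by step index. The class `GuidRegistry` and the identifier string are hypothetical placeholders, not real Rhino GUIDs or framework code.

```python
class GuidRegistry:
    """Maps plan step indices to the object identifiers those steps produced."""

    def __init__(self):
        self._by_step = {}

    def record(self, step_index: int, guid: str) -> None:
        self._by_step[step_index] = guid

    def resolve(self, params: dict, inputs_from: dict) -> dict:
        """Return a copy of params with step references replaced by recorded GUIDs."""
        resolved = dict(params)
        for name, step_index in inputs_from.items():
            if step_index not in self._by_step:
                raise KeyError(f"no GUID recorded for step {step_index}")
            resolved[name] = self._by_step[step_index]
        return resolved


registry = GuidRegistry()
registry.record(0, "guid-circle-0001")   # placeholder identifier
args = registry.resolve({"height": 100}, {"curve": 0})
print(args)
```

Resolving references at call time, rather than asking the LLM to copy identifiers between messages, removes one common source of transcription errors.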

3.4 The Critic Agent

The Critic agent evaluates intermediate results throughout the execution process, providing feedback to guide refinement and error correction. Unlike traditional RL critics that output numeric value estimates, this agent generates qualitative assessments expressed in natural language.

Core Responsibilities:

- Evaluating whether executed operations achieved their intended effects

- Checking logical consistency between plan steps and actual results

- Identifying geometric issues (self-intersections, degenerate geometry, etc.)

- Assessing aesthetic and functional qualities of intermediate designs

- Suggesting corrections when operations fail or produce unexpected results

The Critic has access to both:

- Geometric Analysis Tools: Measuring properties, detecting errors, comparing geometries

- Visual Inspection: Receiving rendered views of the current model state

Evaluation Criteria:

The Critic assesses results across multiple dimensions:

- Correctness: Did operations execute as intended without errors?

- Consistency: Do results logically follow from inputs and previous operations?

- Quality: Are geometric elements well-formed (no degenerate cases, proper topology)?

- Coherence: Does the overall design maintain structural and aesthetic unity?

Feedback from the Critic can trigger several response patterns:

- Continue: Proceed to next planned operation

- Retry: Attempt current operation with modified parameters

- Revise: Return to Planner for strategy adjustment

- Abort: Halt execution and request human intervention
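Since the Critic answers in natural language, the coordinator needs some way to map a reply onto one of these four patterns. The sketch below assumes the Critic is instructed to begin its reply with the chosen keyword; that convention, the `Verdict` enum, and `parse_verdict` are assumptions for illustration. Anything unrecognized falls back to Abort so that ambiguous feedback reaches a human.

```python
from enum import Enum


class Verdict(Enum):
    CONTINUE = "continue"
    RETRY = "retry"
    REVISE = "revise"
    ABORT = "abort"


def parse_verdict(critic_reply: str) -> Verdict:
    """Read the verdict from the first word of the reply; default to ABORT."""
    words = critic_reply.strip().split()
    first = words[0].strip(":.,").lower() if words else ""
    for verdict in Verdict:
        if verdict.value == first:
            return verdict
    return Verdict.ABORT


print(parse_verdict("Retry: the offset distance exceeds the panel width"))
```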

3.5 Coordination and Control Flow

The three agents operate within a coordination framework that manages their interactions and maintains overall system state. The control flow follows a deliberative cycle:

1. User specifies design objective
2. Planner generates initial strategy
3. For each step in plan:
   a. Actor attempts execution
   b. Critic evaluates result
   c. If evaluation negative: return to Planner for revision
   d. If evaluation positive: proceed to next step
4. Present final design to user for approval
5. If user requests changes: return to step 1 with refinement objective

The framework maintains several state components:

- Design Context: Current objective, constraints, and preferences

- Execution History: Record of operations performed and their results

- Geometry State: Current set of objects in the Rhino document

- Evaluation History: Critic feedback across iterations

This state enables the system to maintain coherence across multi-turn interactions and learn from previous attempts within a design session.
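The deliberative cycle can be sketched as a small loop over stubbed agents. This is a simplified illustration under stated assumptions, not the framework's orchestration code: the three agents are plain callables, the Critic returns a boolean, geometry rollback between revisions is not modeled, and `SessionState`, `run_cycle`, and `max_revisions` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SessionState:
    objective: str
    execution_history: list = field(default_factory=list)
    evaluation_history: list = field(default_factory=list)


def run_cycle(state: SessionState,
              plan: Callable[[SessionState], list],
              act: Callable[[str], str],
              criticize: Callable[[str, str], bool],
              max_revisions: int = 3) -> bool:
    """Run plan steps in order; on a negative evaluation, ask the Planner to revise."""
    for _ in range(max_revisions + 1):
        for step in plan(state):
            result = act(step)
            ok = criticize(step, result)
            state.execution_history.append((step, result))
            state.evaluation_history.append(ok)
            if not ok:
                break          # return to the Planner with the updated history
        else:
            return True        # every step passed; present the design to the user
    return False               # revision budget exhausted; request human intervention


def demo_plan(state):
    # Stub Planner: avoids the failing step once a failure is on record.
    failed_before = False in state.evaluation_history
    return ["create_circle", "extrude"] if failed_before else ["create_circle", "extrude_bad"]


state = SessionState("tower")
done = run_cycle(state, demo_plan,
                 act=lambda step: f"ran {step}",
                 criticize=lambda step, result: not step.endswith("_bad"))
print(done, state.evaluation_history)
```

Passing the shared state back to the Planner on every revision is what lets a later plan take earlier failures into account.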

4. Implementation

4.1 Technology Stack

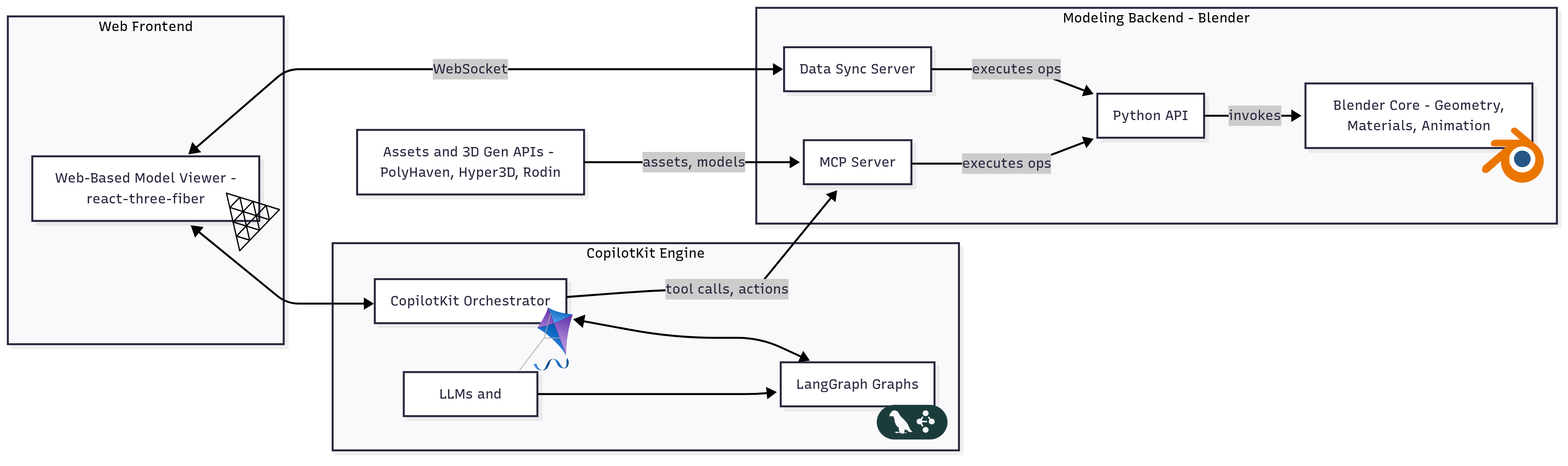

The framework is implemented as a distributed system with components in both JavaScript/TypeScript (for the MCP server) and Python (for the agent orchestration). Key technologies include:

- Rhino 3D with RhinoScript: The primary modeling environment, accessed through RhinoScriptSyntax

- Model Context Protocol SDK: Provides standardized tool interfaces and communication protocols

- Anthropic Claude API: Powers all three agent LLMs with specialized system prompts

- Node.js: Hosts the MCP server that bridges between agents and Rhino

The MCP server exposes Rhino's functionality through structured tools, each implemented as an async function with a defined schema. The server runs alongside Rhino and communicates with it through RhinoScript's COM interface.

4.2 Tool Implementation

Each modeling operation is implemented as an MCP tool with the following structure:

    {
      name: "create_circle",
      description: "Creates a circle in the XY plane",
      inputSchema: {
        type: "object",
        properties: {
          center: {
            type: "array",
            items: { type: "number" },
            minItems: 3,
            maxItems: 3,
            description: "Center point [x, y, z]"
          },
          radius: {
            type: "number",
            exclusiveMinimum: 0,
            description: "Circle radius (must be positive)"
          }
        },
        required: ["center", "radius"]
      }
    }

The implementation handles parameter validation, type conversion, and error reporting:

    // Assumption: `rs` is a bridge object that forwards calls to RhinoScriptSyntax.
    async function createCircle(params) {
      try {
        const { center, radius } = params;

        // Validate parameters
        if (typeof radius !== "number" || radius <= 0) {
          throw new Error("Radius must be positive");
        }

        // Execute Rhino command
        const guid = rs.AddCircle(center, radius);

        // Return result with object identifier
        return {
          success: true,
          guid: guid.toString(),
          message: `Created circle with radius ${radius}`
        };
      } catch (error) {
        // Report failures in the same structured shape as successes
        return {
          success: false,
          error: error.message
        };
      }
    }

The current implementation includes over 50 tools covering fundamental modeling operations. Each tool follows this pattern of schema definition, validation, execution, and structured return values.
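The same checks can also be applied on the orchestration side before a call is sent, so that malformed arguments never reach the server. The sketch below hand-codes the constraints of the `create_circle` schema in Python; it is an illustrative assumption about where such a check could live, and `check_circle_args` is a hypothetical helper rather than part of the described system.

```python
def _is_number(value) -> bool:
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def check_circle_args(args: dict) -> list[str]:
    """Return human-readable problems with create_circle arguments; empty if valid."""
    problems = []
    for key in ("center", "radius"):
        if key not in args:
            problems.append(f"missing required field '{key}'")

    center = args.get("center")
    if center is not None:
        valid = isinstance(center, list) and len(center) == 3 and all(map(_is_number, center))
        if not valid:
            problems.append("center must be a list of three numbers [x, y, z]")

    radius = args.get("radius")
    if radius is not None and not (_is_number(radius) and radius > 0):
        problems.append("radius must be a positive number")
    return problems


print(check_circle_args({"center": [0, 0], "radius": 0}))
```

Returning a list of messages instead of raising on the first problem gives the Actor everything it needs to correct the call in one retry.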

4.3 Agent Prompting Strategies

Each agent operates through carefully designed system prompts that define its role, capabilities, and behavior patterns.

Planner Prompt Structure:

    You are a strategic planning agent for 3D modeling.
    Your role is to analyze design objectives and create
    step-by-step plans for achieving them.

    Guidelines:
    - Break complex objectives into simple operations
    - Ensure logical ordering (create before modify)
    - Specify explicit parameters for each operation
    - Consider geometric constraints and dependencies
    - Output plans as structured lists of operations

    Available operations: [tool descriptions...]

Actor Prompt Structure:

    You are an execution agent that implements modeling
    operations in Rhino 3D through MCP tools.

    Guidelines:
    - Follow the provided plan precisely
    - Invoke tools with correct parameter types
    - Track object GUIDs for subsequent operations
    - Report execution status after each operation
    - Handle errors gracefully and report issues

    Available tools: [tool schemas...]

Critic Prompt Structure:

    You are an evaluation agent that assesses modeling
    results and provides feedback.

    Guidelines:
    - Evaluate whether operations achieved intended effects
    - Check for geometric errors or inconsistencies
    - Assess overall design coherence
    - Provide specific, actionable feedback
    - Suggest corrections when operations fail

    Evaluation criteria: [detailed criteria...]

These prompts are refined iteratively based on observed agent behavior, with particular attention to failure modes and edge cases.

4.4 Error Handling and Recovery

The framework implements several error handling strategies to manage the challenges of LLM-based tool use:

Validation Errors: When the Actor attempts to invoke a tool with invalid parameters, the MCP server returns detailed error messages. The Actor can attempt correction by adjusting parameters, or escalate to the Planner for strategy revision.

Execution Failures: If a modeling operation fails (e.g., boolean operation on invalid geometry), the Critic detects the failure and suggests alternatives. The system may:

- Retry with modified parameters

- Attempt a different operation sequence

- Request human intervention

Logical Inconsistencies: The Critic monitors for cases where operations succeed individually but produce unexpected combined results, indicating plan-level issues requiring Planner intervention.

Context Limits: For complex designs requiring extensive operation sequences, the framework implements checkpointing to save intermediate states and reset agent context while maintaining design continuity.
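A checkpoint of this kind could be as simple as a compact record that replaces the full transcript when an agent's context is reset. The sketch below is an assumption about one possible shape; the field names, the `keep_last` parameter, and the sample history entries are hypothetical and do not come from the described implementation.

```python
import json


def make_checkpoint(objective: str, history: list[dict], keep_last: int = 2) -> dict:
    """Compress the execution history into a compact record for a fresh agent context."""
    created = [entry["guid"] for entry in history if entry.get("success") and "guid" in entry]
    return {
        "objective": objective,
        "steps_attempted": len(history),
        "created_guids": created,          # objects later steps may still reference
        "recent": history[-keep_last:],    # the last few operations, kept verbatim
    }


history = [
    {"tool": "create_circle", "success": True, "guid": "guid-0001"},
    {"tool": "extrude_curve", "success": True, "guid": "guid-0002"},
    {"tool": "split_surface", "success": False, "error": "curve does not intersect surface"},
]
checkpoint = make_checkpoint("tower with helical facade", history)
summary_text = json.dumps(checkpoint)      # small enough to place in a new prompt
print(summary_text)
```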

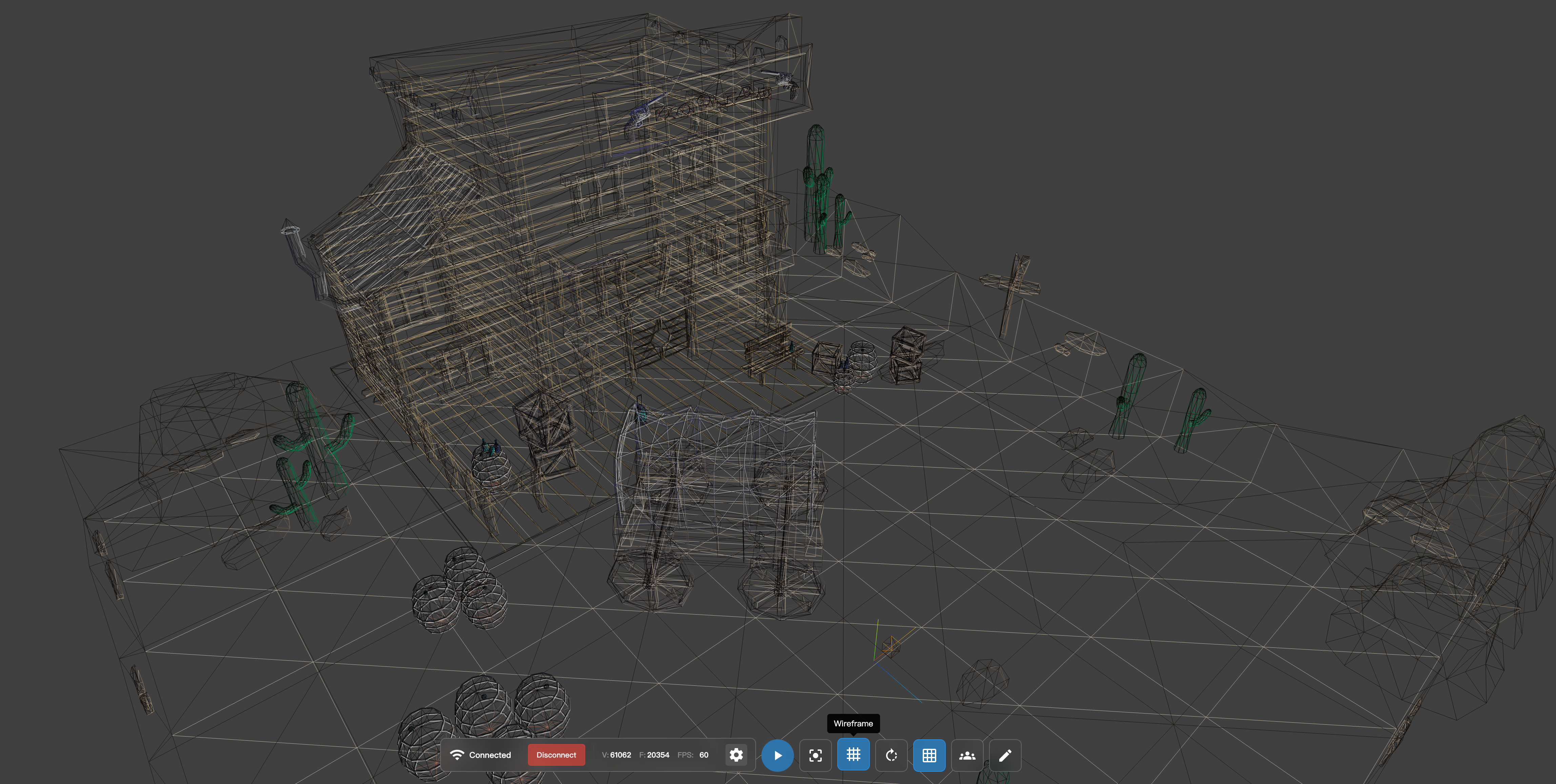

5. Case Studies

5.1 Parametric Tower Generation

Objective: Create a tower structure with helical surface articulation

The user specified: "Create a cylindrical tower with a spiraling pattern on its facade."

Planning Phase: The Planner generated the following strategy:

1. Create base circle (center: origin, radius: 10)

2. Extrude circle to form cylinder (height: 100)

3. Generate helical curve around cylinder (5 rotations, pitch: 20)

4. Split cylinder surface using helical curve

5. Select alternating surface strips

6. Offset selected strips outward (distance: 2)

Execution Phase: The Actor successfully executed operations 1-4 without issues. At step 5 (selection), the Actor initially struggled to specify alternating pattern logic through available selection tools. The Critic identified this and suggested using numeric pattern specification, leading to successful completion.

Evaluation: The Critic assessed the final geometry across several criteria:

- Geometric validity: Passed (no degenerate elements)

- Structural coherence: Passed (all panels properly connected)

- Aesthetic quality: Positive (clear helical pattern visible)

Iteration: The user requested making the spiral tighter. The Planner modified the strategy by adjusting helix rotations from 5 to 8, and the system re-executed successfully.

Analysis: This case demonstrates the framework's ability to handle multi-step geometric operations requiring careful sequencing. The selection challenge illustrates how the Critic-Actor feedback loop enables recovery from partial failures.
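The helix parameters in this case are linked by simple arithmetic: the number of rotations times the pitch equals the height, so 5 rotations at pitch 20 span the full 100-unit cylinder. The sketch below samples such a helix and works out the pitch after the change to 8 rotations, assuming the tower height stays at 100 (the text does not state the new pitch). The function `helix_points` and the sampling density are hypothetical.

```python
import math


def helix_points(radius: float, height: float, turns: float, samples_per_turn: int = 16):
    """Sample points on a helix that wraps `turns` times around a cylinder."""
    total = int(turns * samples_per_turn)
    points = []
    for i in range(total + 1):
        t = i / total                                   # runs from 0 to 1 along the helix
        angle = 2 * math.pi * turns * t
        points.append((radius * math.cos(angle), radius * math.sin(angle), height * t))
    return points


pitch_5 = 100 / 5    # pitch for 5 rotations, matching the plan
pitch_8 = 100 / 8    # pitch for 8 rotations if the height stays at 100
pts = helix_points(radius=10, height=100, turns=8)
print(pitch_5, pitch_8, len(pts))
```

A tighter spiral therefore changes two plan parameters together; a plan that updates only the rotation count while keeping pitch 20 would describe a helix 160 units tall.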

5.2 Organic Form Exploration

Objective: Create an organic pavilion structure through curve manipulation

The user specified: "Design a flowing pavilion structure with organic curves."

Planning Phase: Unlike the parametric tower, this objective required more exploratory planning:

- Create base curved profile (freeform)

- Generate additional curves through offsetting and transformation

- Loft surface between curves

- Analyze and refine curve positions for desired flow

- Add structural ribs by intersecting with planes

Execution Challenges: The Actor initially created mathematically precise but aesthetically rigid curves. The Critic evaluated the intermediate result as geometrically correct but lacking the desired organic quality.

Refinement Process: The Planner revised the strategy to introduce controlled randomness:

- Create smooth interpolated curves with specific control points

- Apply slight perturbations to control point positions

- Ensure continuity constraints are maintained

- Iteratively adjust until aesthetic criteria met

This required multiple Planner-Actor-Critic cycles, with the Critic providing increasingly specific feedback about curve character.
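The perturbation step can be illustrated with a short sketch: each interior control point is moved by a bounded random offset, while the endpoints stay fixed so the curve still meets its neighbors. Pinning the endpoints is only a simple stand-in for the continuity constraints mentioned above, and `perturb_points`, `max_offset`, and the sample coordinates are hypothetical.

```python
import random


def perturb_points(points, max_offset, seed=None, pin_ends=True):
    """Jitter control points by up to max_offset per axis; endpoints stay fixed if pinned."""
    rng = random.Random(seed)              # a seed makes a variation reproducible
    result = []
    for i, point in enumerate(points):
        if pin_ends and i in (0, len(points) - 1):
            result.append(tuple(point))
            continue
        result.append(tuple(c + rng.uniform(-max_offset, max_offset) for c in point))
    return result


base = [(0.0, 0.0, 0.0), (5.0, 0.0, 3.0), (10.0, 0.0, 4.0), (15.0, 0.0, 0.0)]
jittered = perturb_points(base, max_offset=0.5, seed=42)
print(jittered)
```

Seeding the generator lets the user return to a variation they liked, which matters when refinement spans several Planner-Actor-Critic cycles.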

Outcome: After three major iterations, the system produced a structure that the user approved. The final design maintained structural coherence while exhibiting the desired organic quality.

Analysis: This case reveals both capabilities and limitations. The system successfully navigated an open-ended creative task through iterative refinement. However, the "aesthetic quality" assessment by the Critic remained somewhat superficial, relying primarily on mathematical smoothness criteria rather than deeper design principles. This suggests directions for future enhancement of the Critic's evaluation capabilities.

5.3 Adaptive Facade Design

Objective: Create a building facade that adapts panel sizes based on environmental analysis

The user specified: "Design a facade where panel openings vary based on solar exposure."

Planning Complexity: This task required integrating environmental analysis (typically external to pure modeling) with geometric generation:

1. Create base facade surface

2. Divide surface into grid of panels

3. Analyze solar exposure for each panel (mock analysis)

4. Scale panel openings proportionally to exposure values

5. Generate frame elements around openings

Actor-Critic Coordination: The Actor executed steps 1-2 successfully. At step 3, the system encountered a capability gap: solar analysis tools were not available in the current tool set. The Critic identified this limitation and suggested a workaround: manually specify exposure values as input parameters.

The user provided a simplified exposure pattern (higher at top, lower at bottom). The Planner revised the strategy to use these values directly, and execution proceeded.
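With user-supplied exposure values, the scaling step reduces to a linear mapping from exposure to opening size. The sketch below shows one such mapping; the scale bounds, the function `opening_scales`, and the per-row exposure values are illustrative assumptions, not data from the study.

```python
def opening_scales(exposures, min_scale=0.2, max_scale=0.8):
    """Map exposure values linearly onto opening scale factors in [min_scale, max_scale]."""
    lo, hi = min(exposures), max(exposures)
    span = hi - lo
    scales = []
    for exposure in exposures:
        # With uniform exposure there is nothing to grade, so use the midpoint.
        t = 0.5 if span == 0 else (exposure - lo) / span
        scales.append(min_scale + t * (max_scale - min_scale))
    return scales


rows_bottom_to_top = [0.0, 0.5, 1.0]       # illustrative exposure per panel row
scales = opening_scales(rows_bottom_to_top)
print(scales)
```

Clamping the result between two bounds keeps every panel buildable: no opening vanishes entirely and none consumes its whole frame.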

Result Quality: The final facade exhibited the intended variation in panel sizes. The Critic evaluated:

- Geometric validity: Passed

- Pattern coherence: Passed (smooth gradation of sizes)

- Functional appropriateness: Qualified pass (pattern matches provided data, though data was simplified)

Analysis: This case illustrates how the framework handles partial capability gaps through human-in-the-loop adaptation. While full environmental analysis integration would require expanding the tool set, the system gracefully adapted to work with available capabilities. It also highlights the importance of the Critic's role in identifying when external information is needed.

5.4 Failure Case: Complex Boolean Operations

Objective: Create an interlocking geometric puzzle through multiple boolean operations

Planning: The Planner generated a strategy involving:

1. Create base cubic elements

2. Create interlocking protrusions and voids on each piece

3. Use boolean difference to carve voids

4. Use boolean union to join components

Execution Breakdown: The Actor successfully created base geometries. However, at step 3, multiple boolean operations failed due to:

- Coincident faces causing ambiguous intersections

- Tolerance issues between closely spaced elements

- Invalid intermediate geometries from partial boolean results

The Critic detected failures but struggled to provide specific corrective guidance, as the geometric issues were subtle and interdependent.

Recovery Attempts: The Planner attempted several revisions:

- Adjusting element spacing to avoid coincidence

- Changing boolean operation sequence

- Simplifying interlocking geometry

These yielded partial improvements but did not fully resolve issues. After multiple iterations, the system requested human intervention.
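A pre-check for the first failure cause can be sketched for the simplest situation, axis-aligned boxes: if any pair of face planes lies within tolerance of each other, the boolean is at risk. This is an illustrative assumption, not a tool from the described system; it is deliberately conservative (planes may coincide without the faces overlapping), and `coincident_faces` and the tolerance value are hypothetical.

```python
def coincident_faces(box_a, box_b, tol=0.001):
    """Return axes on which two axis-aligned boxes have face planes within tol.

    Each box is ((min_x, min_y, min_z), (max_x, max_y, max_z)).
    """
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    axes = []
    for i, axis in enumerate("xyz"):
        planes_a = (a_min[i], a_max[i])
        planes_b = (b_min[i], b_max[i])
        if any(abs(pa - pb) <= tol for pa in planes_a for pb in planes_b):
            axes.append(axis)
    return axes


block = ((0, 0, 0), (10, 10, 10))
tab_touching = ((10, 2, 2), (15, 8, 8))        # face lies exactly on the block's face
tab_overlapping = ((9.5, 2, 2), (14.5, 8, 8))  # pushed 0.5 into the block
print(coincident_faces(block, tab_touching), coincident_faces(block, tab_overlapping))
```

Running a check like this before each boolean would turn the "adjust element spacing" recovery into a targeted fix, since the result names the axis along which to nudge a piece.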

Analysis: This failure case reveals current limitations:

- Complex Constraint Satisfaction: Boolean operations on precise geometries require satisfying multiple interrelated constraints that are difficult for the Planner to reason about comprehensively.

- Geometric Diagnosis: The Critic lacks detailed geometric analysis tools to diagnose subtle issues like tolerance problems or edge-case topologies.

- Exploratory Recovery: When systematic corrections fail, the system lacks mechanisms for exploratory problem-solving or creative workarounds.

These limitations suggest specific enhancement directions, discussed in Section 7.

6. Evaluation

6.1 Quantitative Metrics

We evaluated the framework's performance on 30 design tasks at each of three complexity levels, measuring:

Success Rate:

- Simple tasks (1-5 operations): 93% (28/30)

- Moderate tasks (6-15 operations): 73% (22/30)

- Complex tasks (16+ operations): 47% (14/30)

Simple tasks included basic geometric generation (primitives, extrusions). Moderate tasks involved multi-step transformations and surface operations. Complex tasks required extensive boolean operations, selection logic, or iteration.
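As a quick arithmetic check, the per-tier counts can be pooled into a single figure. The short calculation below uses only the counts reported above; pooling them gives 64 successes out of 90 tasks, about 71%, which agrees with the overall PAC success rate quoted in Section 6.3.

```python
# (successes, tasks) per complexity tier, as reported above
results = {"simple": (28, 30), "moderate": (22, 30), "complex": (14, 30)}

rates = {tier: round(100 * s / n) for tier, (s, n) in results.items()}
total_success = sum(s for s, _ in results.values())
total_tasks = sum(n for _, n in results.values())
overall = round(100 * total_success / total_tasks)

print(rates, f"overall: {total_success}/{total_tasks} = {overall}%")
```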

Iteration Counts:

- First-attempt success: 43% of tasks

- Success within 3 iterations: 77% of tasks

- Requiring >5 iterations: 13% of tasks

Tasks requiring multiple iterations typically involved:

- Aesthetic refinement (subjective criteria)

- Parameter tuning (finding appropriate scales)

- Error recovery (geometric failures)

Execution Time:

- Planning phase: 5-15 seconds (depending on objective complexity)

- Per-operation execution: 1-3 seconds (Actor + tool invocation)

- Critic evaluation: 3-8 seconds (depending on geometric complexity)

Total time for moderate-complexity tasks averaged 2-4 minutes including iterations, compared to 5-15 minutes for experienced human modelers performing the same tasks manually. However, this comparison is complicated by differences in interaction style and task interpretation.

Tool Usage Patterns:

- Most frequently used tools: Primitive creation (25%), Transformations (22%), Boolean operations (18%)

- Operations with highest failure rates: Boolean difference (12%), Complex selection (15%)

- Average operations per task: 12.4

6.2 Qualitative Assessment

Beyond quantitative metrics, we assessed the framework through qualitative criteria:

Reasoning Transparency: The explicit planning phase provides interpretable strategies that users can review, modify, or learn from. In user studies, designers reported that seeing the Planner's reasoning helped them understand approach options and identify potential issues before execution.

Error Recovery: The Critic-Actor feedback loop enabled recovery from partial failures in 68% of cases where initial execution encountered problems. This contrasts with traditional parametric systems where execution errors typically require complete restart or manual intervention.

Design Space Exploration: The framework facilitated rapid exploration of design variations through parameter adjustment and strategy revision. Users reported that iterating with the system was faster than manual modeling, particularly for tasks involving repetitive operations or systematic variations.

Limitations in Creative Tasks: For highly open-ended creative objectives (e.g., "design something interesting"), the system struggled to generate compelling proposals without substantial user guidance. The Planner tended toward conservative, geometrically simple strategies. This suggests that while the framework excels at executing specified strategies, it currently lacks the deeper design intuition needed for fully autonomous creative exploration.

Learning Curve: Novice 3D modelers (n=8) achieved successful results with the framework after 30-60 minutes of familiarization, compared to weeks typically required to develop basic Rhino proficiency. However, expert modelers (n=5) reported that for familiar tasks, direct manual modeling remained faster than explaining objectives to the agent system.

6.3 Comparative Analysis

Versus Direct LLM Modeling: We compared the PAC framework against a baseline where a single LLM directly generates and executes operations without separation into Planner-Actor-Critic roles. The baseline achieved:

- 61% overall success rate (vs. 71% for PAC)

- 34% first-attempt success (vs. 43% for PAC)

- More frequent execution errors and inconsistencies

The performance gap widened for complex tasks, where the baseline's lack of explicit planning and evaluation led to accumulated errors.

Versus Traditional Parametric Systems: Parametric systems (e.g., Grasshopper) offer:

- Higher reliability for well-defined repetitive tasks

- Better performance for tasks within established paradigms

- More precise control over parameter relationships

The PAC framework offers:

- Greater flexibility for exploratory, ill-defined tasks

- Natural language interaction without learning visual programming

- Easier iteration and modification of overall strategies

These systems address different use cases and could be complementary.

Versus Neural Generative Models: Diffusion-based 3D generation models produce:

- High-quality outputs for trained object categories

- Faster generation (seconds vs. minutes)

- Less control over specific features or dimensions

The PAC framework provides:

- Explicit control over geometric operations

- Interpretable, modifiable generation processes

- Precise dimensional and topological specification

Again, these approaches suit different scenarios: generative models for conceptual exploration, agent frameworks for engineering refinement.

7. Discussion

7.1 Contributions

This work makes several contributions to AI-augmented engineering design:

Architectural Pattern: The Planner–Actor–Critic framework demonstrates how separating strategic reasoning, execution, and evaluation into specialized agents can enable more robust behavior than monolithic LLM approaches. This pattern may generalize beyond 3D modeling to other structured creative domains.

Tool Integration Methodology: By implementing comprehensive modeling capabilities through the Model Context Protocol, we show how standardized agent-tool interfaces can bridge LLMs and complex professional software. The approach offers a template for enabling AI agents in other engineering environments.
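As an illustration of what a standardized agent-tool interface looks like, the sketch below declares one tool in the shape MCP uses for tool listings (a `name`, a `description`, and a JSON Schema under `inputSchema`) and checks call arguments against it. The tool `create_box` and its parameters are hypothetical and are not taken from the system described here, and the validator is a deliberately small stand-in that handles only required keys and a few primitive types rather than full JSON Schema.

```python
from typing import Any, Dict, List

# Hypothetical tool definition; the field names follow the MCP tool shape.
CREATE_BOX_TOOL: Dict[str, Any] = {
    "name": "create_box",
    "description": "Create an axis-aligned box from a corner point and dimensions.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "corner": {"type": "array"},
            "width": {"type": "number"},
            "depth": {"type": "number"},
            "height": {"type": "number"},
        },
        "required": ["corner", "width", "depth", "height"],
    },
}

# Mapping from the schema type names used above to Python types.
_TYPES = {"number": (int, float), "array": (list,), "string": (str,), "object": (dict,)}


def validate_arguments(tool: Dict[str, Any], arguments: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the call looks well formed."""
    schema = tool["inputSchema"]
    errors: List[str] = []
    for key in schema.get("required", []):
        if key not in arguments:
            errors.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            errors.append(f"unknown argument: {key}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(value, bool) or not isinstance(value, _TYPES[spec["type"]]):
            errors.append(f"{key}: expected {spec['type']}")
    return errors


good = {"corner": [0, 0, 0], "width": 10, "depth": 5, "height": 2}
bad = {"corner": [0, 0, 0], "width": "10", "depth": 5}
print(validate_arguments(CREATE_BOX_TOOL, good))
print(validate_arguments(CREATE_BOX_TOOL, bad))
```

Validating arguments before dispatch lets a malformed call be returned to the Actor as structured feedback instead of surfacing as a failure inside the modeling software.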

Evaluation of LLM Reasoning for Engineering: Through systematic testing across tasks of varying complexity, we provide empirical evidence about current capabilities and limitations of LLM-based planning for geometric operations. This informs realistic expectations and identifies specific enhancement opportunities.

Human-AI Co-creation Pattern: Rather than attempting fully autonomous generation, the framework positions AI as a collaborative partner that augments human creativity through strategy suggestion and automation of routine operations. This interaction model may prove more practical than full automation for professional creative work.

7.2 Limitations

Several significant limitations constrain the current system:

Geometric Reasoning Depth: While LLMs excel at high-level planning, they lack deep understanding of geometric properties, constraints, and edge cases. The Planner may generate strategies that are logically sound but geometrically naive, leading to execution failures.

Aesthetic Evaluation: The Critic's assessment of design quality relies primarily on geometric validity and mathematical smoothness criteria. It lacks the nuanced aesthetic judgment that human designers develop through experience and cultural context.

Scalability: For very complex designs requiring hundreds of operations, maintaining context across the Planner-Actor-Critic cycle becomes challenging. Current LLM context windows, while substantial, impose practical limits on task complexity.
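One common way to work within a context limit is to keep the most recent steps verbatim and collapse older ones into a short placeholder. The sketch below illustrates that idea only; it does not describe how the system manages context. The word-count token estimate, the function names, and the default of three retained steps are assumptions.

```python
from typing import List


def approx_tokens(text: str) -> int:
    """Crude token estimate (assumption): one token per whitespace-separated word."""
    return len(text.split())


def trim_history(entries: List[str], budget: int, keep_recent: int = 3) -> List[str]:
    """Keep the newest entries verbatim and replace older ones with one marker line.

    If the result still exceeds the budget, further old entries are dropped,
    but the most recent entry is always kept.
    """
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    if sum(approx_tokens(e) for e in entries) <= budget:
        return list(entries)
    kept = list(entries[-keep_recent:])
    while True:
        omitted = len(entries) - len(kept)
        marker = [f"[{omitted} earlier steps omitted]"] if omitted else []
        out = marker + kept
        if len(kept) == 1 or sum(approx_tokens(e) for e in out) <= budget:
            return out
        kept.pop(0)  # drop the oldest retained entry and try again


history = [f"step {i}: created box number {i}" for i in range(10)]
print(trim_history(history, budget=25))
```

A production system would more likely summarize the omitted steps, for example by recording which objects they created, so that later operations can still refer to them.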

Tool Coverage: The framework's capabilities are constrained by available MCP tools. Many advanced Rhino features (NURBS surface editing, mesh processing, rendering settings) are not yet exposed, limiting the range of possible designs.

Domain Specificity: While 3D modeling provides a concrete testbed, adaptation to other engineering domains (mechanical CAD, circuit design, architectural planning) would require substantial tool development and prompt engineering.

7.3 Broader Implications

Shifting Paradigms in AI-Assisted Design: This work represents a shift from AI as a tool that executes specified commands to AI as a collaborator that proposes strategies and reasons about design problems. This has implications for how designers interact with software and what skills become valuable.

Democratization of Technical Skills: By enabling natural language interaction with professional tools, agent frameworks could make technical capabilities more accessible to non-specialists. However, this raises questions about the value and future of technical expertise.

Agency and Creativity: As AI systems become more capable of autonomous design generation, questions arise about authorship, creativity, and the role of human judgment. Our framework deliberately maintains human agency through oversight and approval, but future systems may blur these boundaries further.

Engineering Education: If AI agents can execute operations that currently require years of training to master, what should engineering education focus on? Perhaps deeper principles, creative strategy, and critical evaluation rather than operational proficiency.

7.4 Future Directions

Several promising research directions emerge from this work:

Enhanced Geometric Reasoning: Integrating specialized geometric reasoning modules (constraint solvers, topology analyzers) with LLM planning could address current geometric naivety. Hybrid approaches combining symbolic and neural reasoning merit exploration.
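A very small example of such a hybrid is a symbolic precondition check that runs on a planned operation before it is executed. The sketch below tests whether a planned Boolean difference is meaningful using axis-aligned bounding boxes. The `Box` type, the function names, and the rejection messages are hypothetical, and a bounding-box test is only a necessary condition for real geometry, not a sufficient one.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box given by its minimum and maximum corners."""
    lo: Vec3
    hi: Vec3


def overlaps(a: Box, b: Box) -> bool:
    """True when the boxes share a region of positive volume (touching does not count)."""
    return all(a.lo[i] < b.hi[i] and b.lo[i] < a.hi[i] for i in range(3))


def contains(outer: Box, inner: Box) -> bool:
    """True when outer fully encloses inner."""
    return all(outer.lo[i] <= inner.lo[i] and outer.hi[i] >= inner.hi[i] for i in range(3))


def check_boolean_difference(target: Box, cutter: Box) -> str:
    """Screen a planned 'target minus cutter' operation before execution."""
    if not overlaps(target, cutter):
        return "reject: cutter does not intersect target"
    if contains(cutter, target):
        return "reject: cutter removes the entire target"
    return "ok"


target = Box((0, 0, 0), (10, 10, 10))
print(check_boolean_difference(target, Box((8, 8, 8), (12, 12, 12))))
print(check_boolean_difference(target, Box((20, 20, 20), (25, 25, 25))))
print(check_boolean_difference(target, Box((-1, -1, -1), (11, 11, 11))))
```

Checks of this kind are cheap and deterministic, so they could screen a plan before any modeling operation runs and return a precise reason to the Planner when a step is rejected.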

Multi-Modal Feedback: Incorporating vision models to enable the Critic to assess designs visually (not just through geometric analysis) could enhance evaluation quality, particularly for aesthetic criteria.

Iterative Refinement Mechanisms: Developing more sophisticated feedback loops that enable the system to learn from failures within a session (meta-learning) could improve success rates on complex tasks.

Collaborative Multi-Agent Systems: Extending beyond three agents to larger teams of specialists (structural analyzer, aesthetic evaluator, fabrication planner, etc.) could enable more comprehensive design generation and evaluation.

Cross-Domain Transfer: Investigating how the PAC framework adapts to other engineering domains (mechanical CAD, circuit design, software architecture) would test its generality and reveal domain-specific requirements.

User Studies with Professionals: Systematic study of how professional designers integrate agent frameworks into their workflows, what tasks they delegate versus retain, and how interaction patterns evolve with experience would inform future system design.

Ethical and Social Considerations: Researching impacts on employment, skill development, design culture, and professional identity as agent frameworks become more capable and widely adopted is essential for responsible development.

8. Conclusion

This paper introduced a Planner–Actor–Critic framework for enabling AI agents to participate in engineering design processes through structured reasoning and tool use. By separating strategic planning, execution, and evaluation into specialized agents communicating through the Model Context Protocol, we demonstrated how LLMs can move beyond content generation to engage with structured, functional engineering outputs.

Through implementation in Rhino 3D and evaluation across diverse modeling tasks, we showed that the framework can successfully execute moderately complex design objectives through multi-step geometric operations. The explicit separation of planning and execution provides transparency and enables recovery from partial failures through the Critic feedback loop.

However, significant limitations remain. Current LLMs lack deep geometric reasoning capabilities, leading to naive plans that fail on subtle constraints. Aesthetic evaluation remains superficial, relying on mathematical criteria rather than design judgment. Scalability challenges emerge for very complex designs requiring hundreds of operations.

Despite these limitations, the work demonstrates a viable path toward AI-assisted engineering design that augments rather than replaces human creativity. By positioning AI as a collaborative partner that proposes strategies and automates routine operations while maintaining human oversight, the framework offers a pragmatic model for human-AI co-creation in professional contexts.

As LLMs continue to advance in reasoning capabilities and as tool integration standards like MCP mature, agent frameworks for engineering design will likely become increasingly capable and widely adopted. The research directions outlined here—enhanced geometric reasoning, multi-modal feedback, collaborative multi-agent systems—offer promising paths toward more robust and creative AI design partners.

Ultimately, the value of such systems will be determined not just by their autonomous capabilities but by how effectively they integrate into human creative processes, enhancing rather than constraining the designer's agency and enabling exploration that would be impractical through manual effort alone.

References

Anthropic. (2024). Model Context Protocol Specification. https://modelcontextprotocol.io

Brown, T., Mann, B., Ryder, N., et al. (2020). Language Models are Few-Shot Learners. Advances in Neural Information Processing Systems, 33, 1877-1901.

Yao, S., Zhao, J., Yu, D., et al. (2022). ReAct: Synergizing Reasoning and Acting in Language Models. arXiv preprint arXiv:2210.03629.

Chase, H. (2022). LangChain: Building applications with LLMs through composability. https://github.com/langchain-ai/langchain

Konda, V. R., & Tsitsiklis, J. N. (2003). On Actor-Critic Algorithms. SIAM Journal on Control and Optimization, 42(4), 1143-1166.

Agarwal, A., Kumar, S., Ross, S., et al. (2023). Guiding Language Models with Visual Feedback for Embodied AI. arXiv preprint arXiv:2303.12153.

Robert McNeel & Associates. (2023). Rhinoceros 3D Documentation. https://www.rhino3d.com/

Rutten, D. (2023). Grasshopper - Algorithmic Modeling for Rhino. https://www.grasshopper3d.com/

Achlioptas, P., Diamanti, O., Mitliagkas, I., & Guibas, L. (2018). Learning Representations and Generative Models for 3D Point Clouds. International Conference on Machine Learning, 40-49.

Jun, H., & Nichol, A. (2023). Shap-E: Generating Conditional 3D Implicit Functions. arXiv preprint arXiv:2305.02463.

Wei, J., Wang, X., Schuurmans, D., et al. (2022). Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. Advances in Neural Information Processing Systems, 35, 24824-24837.

Schick, T., Dwivedi-Yu, J., Dessì, R., et al. (2023). Toolformer: Language Models Can Teach Themselves to Use Tools. arXiv preprint arXiv:2302.04761.

Park, J. S., O'Brien, J., Cai, C. J., et al. (2023). Generative Agents: Interactive Simulacra of Human Behavior. ACM Symposium on User Interface Software and Technology.

Sutton, R. S., & Barto, A. G. (2018). Reinforcement Learning: An Introduction (2nd ed.). MIT Press.

Oxman, R. (2017). Thinking Difference: Theories and Models of Parametric Design Thinking. Design Studies, 52, 4-39.